Database Management System Exam Questions and Answers

Preparing for an evaluation in the field of data organization involves mastering a range of core principles and techniques. Whether focusing on the foundational theories or tackling real-world scenarios, the key to success lies in a solid understanding of the structures and operations involved. Recognizing the importance of each element ensures a more comprehensive grasp of the subject, making it easier to approach challenging topics during assessments.

Key areas of focus often include various models for organizing information, query languages, transaction handling, and performance enhancement techniques. By delving into these topics, learners can anticipate the types of issues they may encounter in tests. A strategic approach, supported by practical exercises and theoretical knowledge, lays the foundation for achieving high scores.

Preparing effectively requires more than just memorizing definitions. It involves practicing the application of concepts to different contexts, solving problems, and understanding how different components interact within a larger framework. By mastering these areas, individuals can confidently approach any challenge they may face in their academic or professional careers.


In order to excel in assessments related to data organization, it is essential to familiarize yourself with common topics and practical scenarios. These tests often involve a variety of theoretical concepts, technical challenges, and problem-solving tasks. Understanding the core principles allows for a strategic approach, enabling candidates to respond effectively to different types of queries.

Key Concepts to Focus On

A firm grasp of the core concepts is crucial for tackling any assessment. Frequently tested areas include:

- Data organization models

- SQL query writing and optimization

- Integrity constraints and data consistency

- Indexing techniques for improved performance

- Transaction handling and concurrency control

Common Problem Scenarios

Another common aspect of these tests involves solving real-world scenarios. Here are some typical types of challenges:

- Designing a relational structure for a given business case

- Writing optimized queries for large datasets

- Resolving issues related to data redundancy

- Handling transaction isolation and deadlock resolution

- Implementing proper backup strategies for data recovery

Being prepared to work through these problems with confidence can significantly improve your performance and demonstrate your understanding of the subject matter.

Key Concepts in Database Management

Mastering the essential principles behind organizing and structuring information is crucial for anyone working with large volumes of data. A deep understanding of these core ideas allows for efficient design, maintenance, and optimization of storage systems, ensuring that data remains accessible, consistent, and secure. The following concepts are foundational for approaching related challenges.

Data Organization Models

The way information is stored, retrieved, and related is at the heart of any efficient system. Common models include:

- Relational model: Focuses on organizing data into tables with rows and columns, simplifying data relationships.

- Hierarchical model: Structures data in a tree-like format, in which each record has at most one parent (the root has none).

- Network model: Allows more complex relationships between entities with multiple parent-child links.

- Object-oriented model: Integrates data as objects, similar to how data is represented in programming languages.

Data Integrity and Consistency

Ensuring the accuracy and consistency of information is a critical aspect. This includes applying various constraints that prevent incorrect or redundant data from being entered into the system. These constraints might include:

- Primary keys: Unique identifiers for each record.

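- Foreign keys: Require that a value in one table matches an existing key in another table, enforcing the relationship between them.

- Check constraints: Restrict column values to those that satisfy a condition, such as falling within a valid range.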

- Not null constraints: Ensure certain fields are always filled.

By applying these constraints effectively, data remains consistent, reliable, and ready for processing. Proper integrity practices are essential for supporting data reliability over time.
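The following minimal sketch shows each of these four constraints rejecting a bad row. It assumes SQLite through Python's built-in `sqlite3` module, and the `department`/`employee` schema is hypothetical. SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

# Hypothetical schema used only to illustrate the constraint types listed above.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE employee (
    emp_id  INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    salary  INTEGER CHECK (salary > 0),
    dept_id INTEGER REFERENCES department(dept_id)
);
INSERT INTO department VALUES (1, 'Sales');
INSERT INTO employee VALUES (10, 'Ana', 50000, 1);
""")

INSERT_EMPLOYEE = "INSERT INTO employee VALUES (?, ?, ?, ?)"

def try_insert(row):
    """Attempt one insert and report whether a constraint rejected it."""
    try:
        conn.execute(INSERT_EMPLOYEE, row)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

results = {
    "duplicate primary key": try_insert((10, "Bo", 40000, 1)),
    "NULL name": try_insert((11, None, 40000, 1)),
    "negative salary": try_insert((12, "Cy", -5, 1)),
    "unknown department": try_insert((13, "Di", 40000, 99)),
    "valid row": try_insert((14, "Ed", 45000, 1)),
}
for case, outcome in results.items():
    print(f"{case}: {outcome}")
```

The exact error messages vary between SQLite versions. In every case except the last, the row is refused before it reaches the table.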

Understanding Relational Databases

Relational models represent one of the most widely used approaches for organizing information, where data is stored in structured tables. These tables are linked together through common elements, allowing for efficient data retrieval, modification, and management. The design and structure of these models make it easier to maintain relationships between different sets of information.

Key Features of Relational Models

The core elements that define the relational approach include:

- Tables (Relations): Data is stored in tables made up of rows and columns, where each row represents a record, and each column represents a specific attribute of that record.

- Primary Keys: Each table typically has a primary key, a column or set of columns whose values uniquely identify each record within that table.

- Foreign Keys: These are used to link tables together by referencing the primary key of another table, establishing relationships between them.

- Normalization: The process of organizing data to minimize redundancy and dependency, ensuring efficiency and consistency.

Relationships Between Tables

One of the primary advantages of relational models is the ability to establish clear relationships between different sets of data. These relationships typically come in three types:

- One-to-One: Each record in one table is linked to exactly one record in another table.

- One-to-Many: A record in one table can be linked to multiple records in another table, but each of those records links back to only one record in the first table.

- Many-to-Many: Records in one table can be linked to multiple records in another table, and vice versa. This is typically handled through a junction table.

By understanding these fundamental relationships, you can more effectively design and manipulate data structures to meet complex requirements and ensure that information flows smoothly across the system.
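A many-to-many relationship is usually implemented with a junction table. The sketch below shows one way to do it, assuming SQLite via Python's `sqlite3`. The `student`, `course`, and `enrollment` tables are hypothetical. Each row of `enrollment` pairs one student with one course, and its composite primary key prevents the same pair from being recorded twice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE student (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE course  (course_id  INTEGER PRIMARY KEY, title TEXT NOT NULL);
-- Junction table: one row per (student, course) pair.
CREATE TABLE enrollment (
    student_id INTEGER NOT NULL REFERENCES student(student_id),
    course_id  INTEGER NOT NULL REFERENCES course(course_id),
    PRIMARY KEY (student_id, course_id)
);
INSERT INTO student VALUES (1, 'Ana'), (2, 'Bo');
INSERT INTO course  VALUES (100, 'Databases'), (200, 'Networks');
INSERT INTO enrollment VALUES (1, 100), (1, 200), (2, 100);
""")

def courses_for(student_name):
    """Follow the junction table from a student to all of their courses."""
    rows = conn.execute("""
        SELECT c.title
        FROM student s
        JOIN enrollment e ON e.student_id = s.student_id
        JOIN course c     ON c.course_id  = e.course_id
        WHERE s.name = ?
        ORDER BY c.title
    """, (student_name,)).fetchall()
    return [title for (title,) in rows]

def students_in(course_title):
    """Follow the junction table in the other direction: course to students."""
    rows = conn.execute("""
        SELECT s.name
        FROM course c
        JOIN enrollment e ON e.course_id  = c.course_id
        JOIN student s    ON s.student_id = e.student_id
        WHERE c.title = ?
        ORDER BY s.name
    """, (course_title,)).fetchall()
    return [name for (name,) in rows]

print(courses_for("Ana"))        # ['Databases', 'Networks']
print(students_in("Databases"))  # ['Ana', 'Bo']
```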

Types of Database Models Explained

There are various approaches for organizing and structuring information within a storage system. Each model offers unique ways to represent relationships between data, catering to different needs and use cases. Understanding these models helps in choosing the right approach for particular tasks, optimizing both performance and data integrity.

Relational Model

The relational model is one of the most popular and widely used methods. In this approach, data is organized into tables, where each row represents a record, and each column represents an attribute of that record. This model uses primary keys to uniquely identify records, and foreign keys to establish relationships between tables.

- Advantages: Simple to understand, easy to query with SQL, supports strong data integrity through constraints.

- Disadvantages: Queries that join many large tables can become expensive, so very large datasets with complex relationships may require careful tuning.

Hierarchical Model

In the hierarchical model, data is organized in a tree-like structure, with records connected through parent-child relationships. Each child record has one parent, but a parent can have multiple children. This structure is ideal for applications where data has a clear hierarchical organization.

- Advantages: Fast access for hierarchical data, simple design for certain use cases.

- Disadvantages: Difficult to handle many-to-many relationships and can be rigid in structure.

Network Model

The network model extends the hierarchical model by allowing more complex relationships. In this model, records can have multiple parents, forming a graph-like structure. This flexibility allows it to represent many-to-many relationships more effectively.

- Advantages: Supports complex relationships and offers flexibility in data representation.

- Disadvantages: More difficult to design and manage compared to the relational model.

Object-Oriented Model

The object-oriented model combines database storage with object-oriented programming principles. It stores data as objects, similar to how data is represented in programming languages like Java or C++. This model is particularly useful for applications that require complex data structures and behavior.

- Advantages: Suitable for complex data types, supports inheritance, encapsulation, and polymorphism.

- Disadvantages: More complex to implement and can be less efficient for simpler tasks.

Choosing the right model depends on the specific requirements of the application. Understanding these models allows for better decision-making when designing systems that manage large volumes of information.

Database Normalization and Its Importance

Normalization is a critical process in structuring data to reduce redundancy and improve efficiency. By organizing information into smaller, more manageable pieces, it ensures data consistency and eliminates unnecessary duplication. The goal of normalization is to break down large, complex tables into simpler ones, making it easier to maintain, query, and update data.

The normalization process involves applying a series of rules called normal forms. These normal forms guide the organization of data, ensuring that it is logically structured and free from anomalies. Each successive normal form addresses specific types of issues that can arise from poor design or data duplication.

Stages of Normalization

The most common stages of normalization are:

| Normal Form | Description |
|---|---|
| First Normal Form (1NF) | Each column holds atomic (indivisible) values, and there are no repeating groups or duplicate columns. |
| Second Normal Form (2NF) | The table is in 1NF and has no partial dependencies: every non-key attribute depends on the whole primary key, not just on part of a composite key. |
| Third Normal Form (3NF) | The table is in 2NF and has no transitive dependencies: non-key attributes do not depend on other non-key attributes, only on the primary key. |

By applying these rules, the design becomes more streamlined and less prone to update, insertion, and deletion anomalies. However, achieving the highest level of normalization is not always necessary. Normalized data often requires more joins, so performance considerations may sometimes justify denormalization for faster reads.

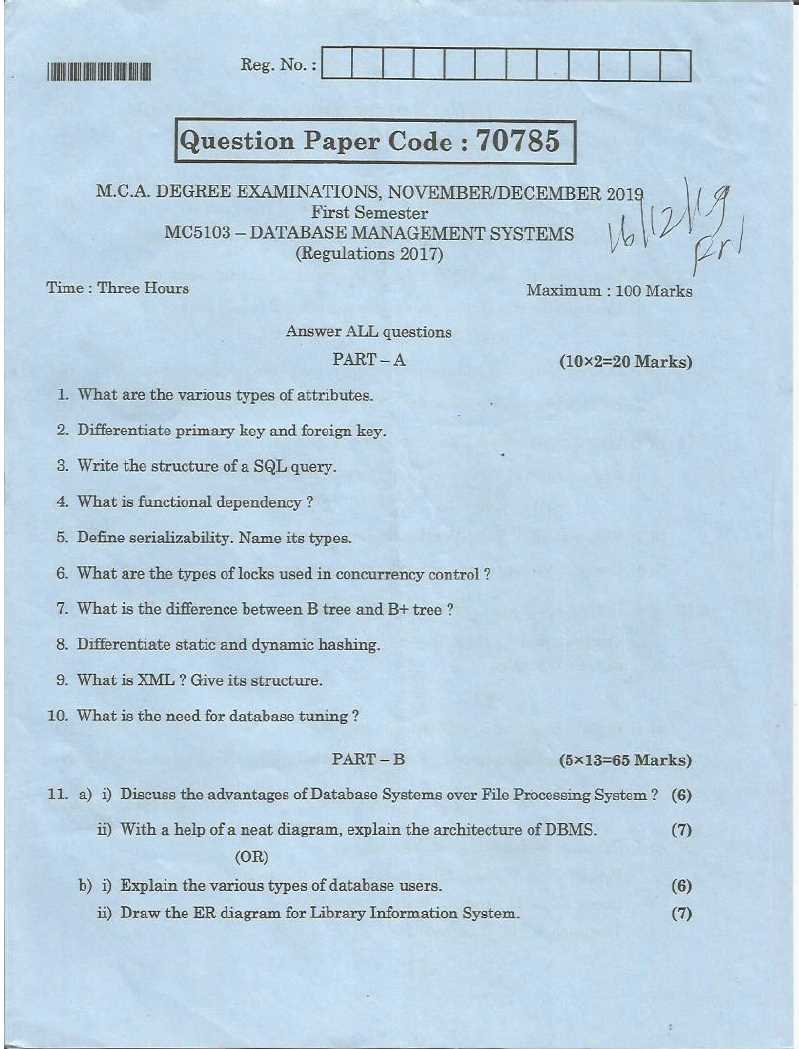

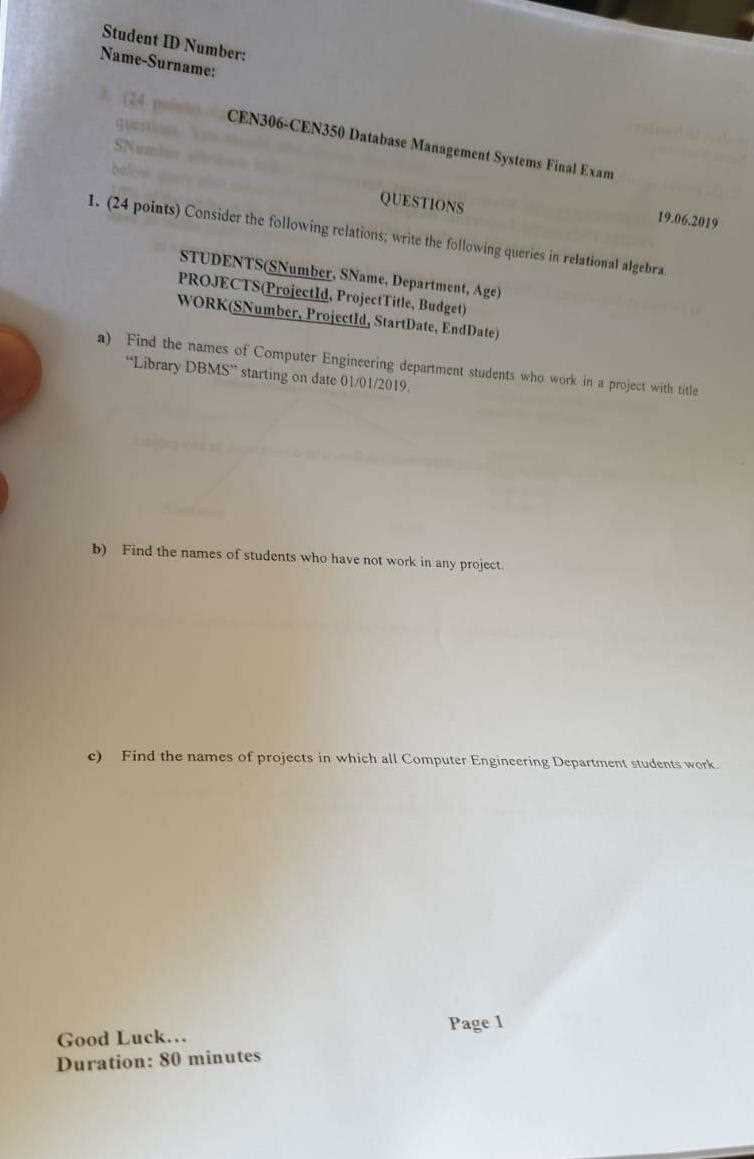

SQL Queries: Common Exam Questions

In any test related to working with relational data, queries form a central part of the assessment. Being able to write efficient and correct SQL commands is crucial for solving problems and extracting meaningful insights. Commonly, the tasks revolve around retrieving, updating, or manipulating data from different tables, often requiring the application of multiple SQL techniques.

Popular Query Types

Here are some of the most commonly encountered types of queries that are tested:

| Query Type | Description |
|---|---|
| SELECT | Retrieves data from one or more tables, optionally filtering and sorting the results based on specific conditions. |
| INSERT | Adds new records to a table. |
| UPDATE | Modifies existing records; a WHERE clause restricts which rows change (without one, every row is updated). |
| DELETE | Removes records; a WHERE clause restricts which rows are removed (without one, every row is deleted). |
| JOIN | A clause used within a query (typically a SELECT) to combine rows from two or more tables based on a related column. |
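The data-modifying statements in the table can be exercised with a short sketch. It assumes SQLite via Python's `sqlite3` and uses a hypothetical `product` table. The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid SQL injection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# INSERT: add new rows; '?' placeholders keep values separate from the SQL text.
conn.executemany("INSERT INTO product (id, name, price) VALUES (?, ?, ?)",
                 [(1, "pen", 1.50), (2, "notebook", 3.00), (3, "stapler", 7.25)])

# UPDATE: change only the rows matched by WHERE (omitting WHERE would update every row).
conn.execute("UPDATE product SET price = price * 2 WHERE name = ?", ("pen",))

# DELETE: remove only the rows matched by WHERE.
conn.execute("DELETE FROM product WHERE price > ?", (5.0,))
conn.commit()

# SELECT: read back what remains, sorted by id.
rows = conn.execute("SELECT id, name, price FROM product ORDER BY id").fetchall()
print(rows)  # [(1, 'pen', 3.0), (2, 'notebook', 3.0)]
```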

Common Scenarios

Common problems may involve tasks such as filtering records, grouping data, or using subqueries. Some typical scenarios include:

- Retrieving all records from a table where certain conditions are met, using the WHERE clause.

- Sorting the results of a query in ascending or descending order using the ORDER BY clause.

- Aggregating data, such as counting records or calculating sums, using functions like COUNT, SUM, and AVG.

- Combining results from multiple tables using INNER JOIN or LEFT JOIN.
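The sketch below combines the scenarios above in one place, assuming SQLite via Python's `sqlite3` and hypothetical `customer` and `orders` tables. It filters and sorts, aggregates with `COUNT`, `SUM`, and `AVG`, and contrasts `INNER JOIN`, which drops customers without orders, with `LEFT JOIN`, which keeps them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders   (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customer VALUES (1, 'Ana'), (2, 'Bo'), (3, 'Cy');
INSERT INTO orders   VALUES (10, 1, 20.0), (11, 1, 30.0), (12, 2, 15.0);
""")

# WHERE + ORDER BY: orders of at least 20.0, largest first.
big = conn.execute(
    "SELECT id, amount FROM orders WHERE amount >= ? ORDER BY amount DESC", (20.0,)
).fetchall()

# INNER JOIN + GROUP BY + aggregates: only customers that have orders appear.
totals = conn.execute("""
    SELECT c.name, COUNT(o.id), SUM(o.amount), AVG(o.amount)
    FROM customer c
    INNER JOIN orders o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# LEFT JOIN: every customer appears; COUNT(o.id) is 0 when there are no matches.
counts = conn.execute("""
    SELECT c.name, COUNT(o.id)
    FROM customer c
    LEFT JOIN orders o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

print(big)     # [(11, 30.0), (10, 20.0)]
print(totals)  # [('Ana', 2, 50.0, 25.0), ('Bo', 1, 15.0, 15.0)]
print(counts)  # [('Ana', 2), ('Bo', 1), ('Cy', 0)]
```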

Mastering these common types of queries will help in solving problems quickly and efficiently, which is essential for performing well in related assessments.

Transactions and Concurrency Control

In environments where multiple users interact with the same data, ensuring that operations are executed consistently and efficiently is critical. Transactions are fundamental units of work, ensuring that changes to the data are reliable, while concurrency control mechanisms handle the challenges posed by simultaneous access to the same data. Both are essential for maintaining data integrity and avoiding conflicts during concurrent operations.

Key Properties of Transactions

Transactions are defined by a set of properties, known as ACID, which ensure that the data remains consistent even in the face of errors or system failures:

- Atomicity: Ensures that all operations within a transaction are completed successfully, or none are applied.

- Consistency: Guarantees that the system transitions from one valid state to another, maintaining all rules and constraints.

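- Isolation: Ensures that concurrently running transactions do not see each other's intermediate, uncommitted changes, so the outcome matches some serial execution (to the degree guaranteed by the configured isolation level).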

- Durability: Guarantees that once a transaction is committed, its results are permanently stored, even in the case of a failure.
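Atomicity is easiest to see in a money transfer. This minimal sketch assumes SQLite via Python's `sqlite3`, whose connection object, used as a context manager, commits on success and rolls back if an exception escapes. The `account` table and `transfer` function are hypothetical. The credit is deliberately applied before the debit, so a failed debit shows that the earlier credit is undone as well.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (id TEXT PRIMARY KEY, "
             "balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO account VALUES (?, ?)", [("A", 100), ("B", 50)])
conn.commit()

def transfer(src, dst, amount):
    """Move money as one transaction: both updates are applied, or neither is."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        # CHECK (balance >= 0) failed on the debit; the credit to dst was rolled back too.
        return False

def balances():
    return dict(conn.execute("SELECT id, balance FROM account"))

ok = transfer("A", "B", 30)       # succeeds
failed = transfer("A", "B", 500)  # would overdraw A, so nothing changes
print(ok, failed, balances())     # True False {'A': 70, 'B': 80}
```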

Concurrency Control Methods

As multiple transactions are processed at the same time, ensuring that they do not interfere with each other is crucial. Several concurrency control methods are commonly used to prevent anomalies such as lost updates and dirty reads (reading another transaction's uncommitted changes). Lock-based methods must also detect or prevent deadlocks.

- Locking: Locks are placed on data to control concurrent access. Shared locks allow several transactions to read the same data. Exclusive locks, required for writes, block all other access until they are released.

- Timestamp Ordering: Each transaction is assigned a timestamp, and conflicting operations are executed in timestamp order. A transaction whose operation would violate that order is aborted and restarted.

- Optimistic Concurrency Control: This method allows transactions to execute without locking resources, but checks for conflicts before committing changes, rolling back if any issues are detected.
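Optimistic concurrency control is often implemented at the application level with a version column, a common pattern though not the only one. The sketch assumes SQLite via Python's `sqlite3`. A single connection simulates two users, and the `item` table and helper functions are hypothetical. The write succeeds only if the row's version is unchanged since it was read. Otherwise zero rows are updated, and the caller should re-read and retry instead of silently overwriting the other user's change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE item (id INTEGER PRIMARY KEY, qty INTEGER, version INTEGER)")
conn.execute("INSERT INTO item VALUES (1, 10, 0)")
conn.commit()

def read(item_id):
    """Read phase: fetch the value together with its version number."""
    return conn.execute("SELECT qty, version FROM item WHERE id = ?", (item_id,)).fetchone()

def write(item_id, new_qty, seen_version):
    """Validate-and-write: succeeds only if no one changed the row since it was read."""
    with conn:
        cur = conn.execute(
            "UPDATE item SET qty = ?, version = version + 1 WHERE id = ? AND version = ?",
            (new_qty, item_id, seen_version),
        )
    return cur.rowcount == 1  # 0 rows means a conflicting write committed first

# Two "users" read the same row, then both try to decrement it.
qty1, v1 = read(1)
qty2, v2 = read(1)
first = write(1, qty1 - 3, v1)   # version still matches: accepted
second = write(1, qty2 - 5, v2)  # version was bumped by the first write: rejected
print(first, second, read(1))    # True False (7, 1)
```

Without the version check, the second write would set the quantity to 5 and lose the first user's update.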

By using these mechanisms, systems can manage simultaneous operations, ensuring that data remains accurate and consistent despite high levels of concurrency. Proper transaction handling is essential for systems that require high availability and reliability, such as financial applications or e-commerce platforms.

Indexing and Performance Optimization

Efficient access to large sets of information is crucial for performance in any application. Indexing is a technique used to speed up retrieval times by creating data structures that allow for quick searches. Along with indexing, performance optimization encompasses a variety of strategies aimed at improving the speed and efficiency of operations, from query execution to resource management.

Creating the right indexes ensures that the system can quickly locate specific data without having to scan entire datasets. However, it is essential to strike a balance between creating enough indexes for efficient queries and avoiding unnecessary ones. Every index must be updated on each insert, update, or delete, so excess indexes slow down write operations.

Types of Indexes

Various types of indexes are used depending on the data structure and the queries being executed:

- Single-Column Index: A basic index that is created on a single column. It is useful when queries involve filtering or sorting by a single attribute.

- Composite Index: An index created on multiple columns. It is efficient when queries filter or join on those columns, particularly when the conditions include the index's leading (first) column.

- Unique Index: Ensures that all values in the indexed column are unique. This type of index is often used to enforce data integrity.

- Full-Text Index: Used for indexing large text fields, allowing for fast searches based on keywords or phrases.
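You can confirm that an index changes how a query runs by asking the engine for its plan. This sketch assumes SQLite via Python's `sqlite3` and its `EXPLAIN QUERY PLAN` command. The table and the index name `idx_orders_customer` are hypothetical. The wording of the plan text differs between SQLite versions, so the comments describe it only loosely.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
                 [(i % 100, float(i)) for i in range(1000)])

QUERY = "SELECT amount FROM orders WHERE customer_id = ?"

def plan(sql, params):
    """Return SQLite's plan description strings (the last column of each plan row)."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(QUERY, (42,))  # typically a full-table SCAN
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))   # typically a SEARCH ... USING INDEX idx_orders_customer
print(before)
print(after)
```

The same check is a practical way to spot unused or missing indexes before adding more.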

Optimization Techniques

Aside from indexing, there are several optimization strategies to improve overall performance:

- Query Optimization: Writing efficient queries that avoid unnecessary joins or subqueries can reduce execution time significantly. Analyzing query execution plans helps in identifying bottlenecks.

- Data Partitioning: Dividing large datasets into smaller, more manageable partitions can help optimize both query performance and maintenance tasks.

- Denormalization: Sometimes, performance can be improved by reducing the number of joins, even if it means introducing some redundancy in the data structure.

- Caching: Caching frequently accessed data reduces the need for repeated queries, improving response times.
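Caching can be sketched with Python's `functools.lru_cache` wrapped around a lookup. The sketch assumes SQLite via `sqlite3`, and the `setting` table and counter are hypothetical. Repeated calls skip the database, but a cache must be invalidated after writes. Otherwise it keeps serving stale values.

```python
import sqlite3
from functools import lru_cache

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE setting (key TEXT PRIMARY KEY, value TEXT)")
conn.execute("INSERT INTO setting VALUES ('currency', 'EUR')")

query_count = 0  # counts how often the database is actually queried

@lru_cache(maxsize=128)
def get_setting(key):
    """Cached lookup: repeated calls with the same key do not hit the database."""
    global query_count
    query_count += 1
    row = conn.execute("SELECT value FROM setting WHERE key = ?", (key,)).fetchone()
    return row[0] if row else None

for _ in range(5):
    get_setting("currency")
print(query_count)  # 1

# After a write, the cached value is stale and must be invalidated.
conn.execute("UPDATE setting SET value = 'USD' WHERE key = 'currency'")
get_setting.cache_clear()
print(get_setting("currency"), query_count)  # USD 2
```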

By effectively combining indexing with these optimization methods, systems can handle large amounts of data with improved speed, ensuring better performance even as data scales.

Database Security: Key Practices

Protecting sensitive information is essential in any system that stores or processes valuable data. Security practices are crucial for safeguarding data from unauthorized access, manipulation, and breaches. A robust security framework ensures data integrity, confidentiality, and availability, making it resilient against potential threats and attacks.

Authentication and Authorization

To prevent unauthorized users from gaining access to sensitive data, two primary security measures are enforced: authentication and authorization. Authentication verifies the identity of users, ensuring that only authorized individuals can access the system. Authorization controls what actions authenticated users can perform on the data, ensuring that they can only access or modify the data they are permitted to.

- Multi-Factor Authentication: Adding extra layers of security by requiring multiple forms of identification (e.g., password and biometric verification).

- Role-Based Access Control: Assigning permissions based on user roles, ensuring that users only have access to the resources necessary for their tasks.

- Encryption: Protecting data by converting it into an unreadable format that can only be deciphered by authorized users with the correct decryption key.
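The role-based access control idea can be reduced to a small lookup. In this sketch, the roles, users, and permission names are hypothetical. Real database systems usually manage this with SQL `GRANT`/`REVOKE` and roles, or with an external identity provider, rather than application dictionaries. Access is denied by default unless one of the user's roles grants the action.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"SELECT"},
    "clerk":   {"SELECT", "INSERT", "UPDATE"},
    "admin":   {"SELECT", "INSERT", "UPDATE", "DELETE"},
}
USER_ROLES = {"ana": {"analyst"}, "bo": {"clerk"}, "cy": {"analyst", "admin"}}

def is_allowed(user, action):
    """Allow an action only if at least one of the user's roles grants it (deny by default)."""
    return any(action in ROLE_PERMISSIONS.get(role, set())
               for role in USER_ROLES.get(user, set()))

print(is_allowed("ana", "SELECT"))  # True
print(is_allowed("ana", "DELETE"))  # False
print(is_allowed("eve", "SELECT"))  # False (unknown user)
```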

Monitoring and Auditing

Ongoing monitoring and auditing are essential practices to detect suspicious activities and ensure compliance with security policies. Regular audits review records of user actions to verify that access has been legitimate. Continuous monitoring helps identify potential threats in real time, allowing rapid responses to unusual activity.

- Activity Logging: Maintaining logs of user activity provides a record of actions taken, making it easier to trace unauthorized or suspicious actions.

- Real-Time Alerts: Setting up alerts for specific actions or patterns of behavior that may indicate a security breach.

- Vulnerability Scanning: Regularly scanning the system for potential weaknesses, such as outdated software or misconfigurations, that could be exploited by attackers.
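Activity logging can be sketched with Python's `sqlite3` module, whose `Connection.set_trace_callback` invokes a function with the text of each SQL statement the connection runs. The in-memory `audit_log` list and the `patient` table are hypothetical stand-ins. A production audit trail would normally be written to durable, append-only storage and record the user identity as well.

```python
import sqlite3
from datetime import datetime, timezone

audit_log = []  # stand-in for durable, append-only audit storage

def log_statement(sql):
    """Record each SQL statement the connection executes, with a UTC timestamp."""
    audit_log.append((datetime.now(timezone.utc).isoformat(), sql))

conn = sqlite3.connect(":memory:")
conn.set_trace_callback(log_statement)
conn.execute("CREATE TABLE patient (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO patient VALUES (1, 'Ana')")
conn.execute("SELECT name FROM patient WHERE id = 1").fetchone()

for ts, sql in audit_log:
    print(ts, sql)
```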

By implementing these practices, systems can enhance their resilience to external and internal threats, ensuring that sensitive information remains secure and protected from unauthorized access.

Database Backup and Recovery Techniques

Protecting data against loss or corruption is a critical aspect of any system that handles important information. Effective backup and recovery strategies ensure that data can be restored to a consistent and functional state in the event of a failure. These practices are essential for minimizing downtime and preventing data loss caused by accidental deletion, system crashes, or malicious attacks.

Types of Backups

There are several methods for creating backups, each suited to different needs and recovery objectives. Choosing the appropriate backup strategy depends on the volume of data, the frequency of changes, and the required recovery time.

| Backup Type | Description |
|---|---|
| Full Backup | Copies the entire data set at once. It provides the most complete protection but requires the most storage space and time. |
| Incremental Backup | Saves only the changes made since the last backup of any kind. It uses less storage and completes faster, but a restore requires the last full backup plus every incremental backup taken after it, applied in order. |
| Differential Backup | Saves all changes since the last full backup, so a restore needs only the last full backup and the latest differential, which is faster than replaying incrementals. However, each differential grows until the next full backup, so it requires more storage over time. |
| Mirror Backup | Maintains an exact copy of the original data, without compression or deduplication, providing quick access to backup files but requiring substantial storage capacity. |
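The restore requirements in the table can be made concrete by computing which backups must be applied. The `Backup` class, the integer clock, and `restore_chain` in this sketch are hypothetical. It assumes the usual semantics: an incremental holds changes since the previous backup of any kind, and a differential holds changes since the last full backup.

```python
from dataclasses import dataclass

@dataclass
class Backup:
    time: int  # when the backup was taken (hypothetical integer clock)
    kind: str  # "full", "incremental", or "differential"

def restore_chain(backups, target_time):
    """Return, in apply order, the backups that rebuild the latest state at or before target_time."""
    usable = sorted((b for b in backups if b.time <= target_time), key=lambda b: b.time)
    full_positions = [i for i, b in enumerate(usable) if b.kind == "full"]
    if not full_positions:
        raise ValueError("no full backup at or before target_time")
    start = full_positions[-1]
    chain = [usable[start]]
    for b in usable[start + 1:]:
        if b.kind == "differential":
            chain = [usable[start], b]  # supersedes everything since the full backup
        else:
            chain.append(b)             # each incremental builds on the backup before it
    return chain

incremental_week = [Backup(0, "full")] + [Backup(t, "incremental") for t in (1, 2, 3)]
differential_week = [Backup(0, "full")] + [Backup(t, "differential") for t in (1, 2, 3)]
print([b.time for b in restore_chain(incremental_week, 3)])   # [0, 1, 2, 3]
print([b.time for b in restore_chain(differential_week, 3)])  # [0, 3]
```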

Recovery Strategies

Effective recovery techniques are essential for minimizing downtime and ensuring that the system can quickly return to a functional state. Different strategies provide varying levels of granularity and speed in restoring data:

- Point-in-Time Recovery: Restores data to a specific moment, such as before a system failure or a data corruption event, allowing for precise recovery.

- Rollback Recovery: Returns the system to the most recent consistent state by undoing the changes of transactions that had not committed at the time of the failure.

- Hot Backup: Allows for backups to be taken while the system is still running, ensuring minimal disruption to users.

- Cold Backup: Performed when the system is offline, ensuring that the backup is taken from a consistent and stable state.
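A hot backup and a restore can be demonstrated with Python's `sqlite3.Connection.backup`, which wraps SQLite's online backup API and copies a database while its connection stays open. This sketch uses in-memory databases for self-containment. In practice the target would be a file. The `ledger` table is hypothetical.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE ledger (id INTEGER PRIMARY KEY, entry TEXT)")
source.executemany("INSERT INTO ledger (entry) VALUES (?)", [("open",), ("deposit",)])
source.commit()

# Online ("hot") backup: the source connection remains open and usable throughout.
target = sqlite3.connect(":memory:")  # in practice, a file connection such as sqlite3.connect("backup.db")
source.backup(target)

# Simulate accidental data loss on the live database, then restore from the copy.
source.execute("DELETE FROM ledger")
source.commit()
target.backup(source)  # copy the backup back over the damaged database

rows = source.execute("SELECT entry FROM ledger ORDER BY id").fetchall()
print(rows)  # [('open',), ('deposit',)]
```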

By regularly backing up data and using appropriate recovery methods, organizations can protect themselves from data loss and ensure that they are prepared to restore operations quickly and efficiently in the event of a failure.

Data Integrity and Constraints in DBMS

Ensuring the accuracy, consistency, and reliability of data is fundamental in any system that processes valuable information. To maintain data integrity, it is essential to establish rules and constraints that guide how data is entered, modified, and stored. These mechanisms help prevent errors, inconsistencies, and invalid entries, thereby ensuring that data remains accurate and trustworthy throughout its lifecycle.

Types of Data Integrity

There are several aspects of data integrity that need to be managed to guarantee the correctness and validity of the information. The most commonly addressed types are:

- Entity Integrity: Ensures that each record in a table is unique and identifiable, typically enforced through primary keys.

- Referential Integrity: Guarantees that relationships between tables are maintained, ensuring that foreign keys accurately point to valid primary key values in related tables.

- Domain Integrity: Ensures that data entered into a field matches the defined constraints for that field, such as data type or value range.

- User-Defined Integrity: Allows for the creation of custom rules specific to the application’s needs, ensuring that business logic is followed in data handling.

Common Constraints

Constraints are the rules that enforce the integrity of the data and limit the types of values that can be entered or modified. These are some of the most commonly used constraints:

- Primary Key: Ensures that each record in a table is unique and identifiable, preventing duplicate entries.

- Foreign Key: Establishes a link between two tables by enforcing that a value in one table must exist as a valid reference in another table.

- Unique: Ensures that all values in a given column are unique, preventing duplicate data entries.

- Check: Validates that data entered into a column meets specific conditions or constraints, such as ensuring that a value is within a specified range.

- Not Null: Enforces that a field cannot contain null values, ensuring that data must always be provided for certain columns.

By implementing these constraints, systems can ensure that the data remains accurate, consistent, and reliable, making it easier to maintain data quality and prevent errors. Proper enforcement of these rules helps create a stable environment for processing and analyzing information.
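Referential integrity also governs deletions. This sketch assumes SQLite via Python's `sqlite3`, with foreign-key enforcement switched on. The `customer` and `invoice` tables are hypothetical. With `ON DELETE RESTRICT`, a customer who still has invoices cannot be removed, and the `UNIQUE` email column rejects a duplicate address.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # foreign keys are not enforced by default in SQLite
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
CREATE TABLE invoice (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(id) ON DELETE RESTRICT,
    total       REAL CHECK (total >= 0)
);
INSERT INTO customer VALUES (1, 'ana@example.com'), (2, 'bo@example.com');
INSERT INTO invoice VALUES (100, 1, 25.0);
""")

def attempt(sql, params=()):
    """Run one statement in its own transaction and report whether integrity rules blocked it."""
    try:
        with conn:
            conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"blocked ({exc})"

r1 = attempt("DELETE FROM customer WHERE id = 1")  # invoice 100 still references customer 1
r2 = attempt("DELETE FROM customer WHERE id = 2")  # nothing references customer 2
r3 = attempt("INSERT INTO customer VALUES (3, 'ana@example.com')")  # duplicate email
print(r1, r2, r3, sep="\n")
```

Switching the clause to `ON DELETE CASCADE` would instead delete the customer's invoices along with the customer, which is convenient but riskier.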

Advanced Database Design Principles

Effective design is crucial for ensuring the scalability, efficiency, and flexibility of any information-handling environment. Advanced design principles focus on optimizing the structure, relationships, and data flow within a system to support complex queries, high performance, and easy maintenance. These principles guide the creation of a robust architecture that aligns with both business needs and technical requirements, ensuring smooth data integration and retrieval.

Normalization for Efficient Design

One of the core principles in advanced design is normalization, which aims to reduce redundancy and ensure data integrity. This process involves organizing data into multiple related tables, eliminating unnecessary duplication. By adhering to different normal forms, such as 1NF, 2NF, and 3NF, designers can ensure that data remains consistent and efficient to manage.

- First Normal Form (1NF): Ensures that all columns contain atomic values and there is no repeating group of columns.

- Second Normal Form (2NF): Builds upon 1NF by removing partial dependencies, ensuring that non-key attributes are fully dependent on the primary key.

- Third Normal Form (3NF): Ensures that all non-key attributes depend only on the primary key, eliminating transitive dependencies.

Denormalization for Performance

While normalization optimizes data integrity, it can sometimes create performance bottlenecks due to excessive table joins in complex queries. Denormalization, the process of combining tables or adding redundant data, is often used to improve query performance by reducing the number of joins required. However, this technique should be used judiciously to avoid introducing unnecessary data redundancy and complexity.

Entity-Relationship Modeling

Entity-relationship (ER) modeling is another advanced principle that focuses on visually representing the relationships between different entities in a system. ER diagrams help to define how data points interact, providing a clear map of how tables are interconnected. This approach simplifies the process of understanding and maintaining large, complex data structures.

Scalability and Partitioning

As data volumes grow, scalability becomes a critical factor in design. Techniques such as horizontal partitioning and vertical partitioning allow large datasets to be divided into smaller, more manageable pieces. Horizontal partitioning splits a table's rows across partitions and is called sharding when the partitions live on separate servers. Vertical partitioning splits a table's columns. These techniques help the system handle higher loads and keep performance acceptable as the amount of data increases.
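Horizontal partitioning across servers needs a rule that maps each row to a shard. A common rule is to hash a partition key, and the sketch below shows one way to do it. The shard names and customer IDs are hypothetical. It uses `zlib.crc32` because it gives the same result in every process, unlike Python's built-in `hash()` for strings, which is randomized per process.

```python
import zlib

SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]  # hypothetical server names

def shard_for(customer_id: str) -> str:
    """Route a row to a shard by hashing its partition key with a stable hash."""
    return SHARDS[zlib.crc32(customer_id.encode("utf-8")) % len(SHARDS)]

placement = {}
for cid in ["c-1001", "c-1002", "c-1003", "c-1004", "c-1005"]:
    placement.setdefault(shard_for(cid), []).append(cid)
print(placement)
```

With plain modulo hashing, changing the number of shards moves most keys to a new shard. Consistent hashing is often used to reduce that reshuffling.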

By incorporating these advanced design principles, developers can create systems that are not only efficient but also flexible and scalable, able to handle both current and future demands. These practices help to ensure that the system remains robust, responsive, and capable of supporting a wide variety of business applications.

Understanding ER Diagrams for Exams

Entity-Relationship (ER) diagrams are fundamental tools used to represent the structure of data and its relationships. Understanding how to read and create these diagrams is crucial for those preparing for assessments in the field of data organization and structure. ER diagrams visually depict the entities within a system, their attributes, and the relationships between them, offering a clear overview of the system’s design. Mastering these diagrams helps in both understanding the underlying data structure and designing systems that are efficient and coherent.

Components of an ER Diagram

ER diagrams are composed of several key elements that form the building blocks of the structure. These elements allow the creation of a blueprint for the organization and interrelation of data. Below are the primary components:

- Entities: Objects or concepts about which information is stored. An entity can represent a person, place, thing, or event.

- Attributes: Characteristics or properties that describe entities. For instance, an entity “Employee” may have attributes like “Employee_ID” or “Name”.

- Relationships: These show how entities are linked together. A “Works_For” relationship could link an employee to the department they work for.

Types of Relationships

In an ER diagram, relationships are classified by cardinality: how many instances of one entity can be associated with instances of another. Understanding these types is key for interpreting and constructing ER diagrams effectively.

- One-to-One (1:1): Each instance of one entity is associated with at most one instance of another entity, and vice versa.

- One-to-Many (1:N): One instance of an entity can be related to multiple instances of another entity, while each of those instances relates back to only one.

- Many-to-Many (M:N): Multiple instances of one entity can be related to multiple instances of another entity.

Creating ER Diagrams

When constructing an ER diagram, it is important to follow a clear, structured approach. Here are some general steps to consider:

- Identify the Entities: Start by listing all the key objects or concepts that the diagram will represent.

- Define the Attributes: For each entity, determine what properties need to be represented.

- Establish Relationships: Identify how each entity relates to others and define the nature of these relationships.

- Draw the Diagram: Use standardized symbols (rectangles for entities, ovals for attributes, diamonds for relationships) to draw the diagram, ensuring clarity and readability.
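Once drawn, an ER diagram maps onto tables fairly mechanically. This sketch uses the "Employee Works_For Department" example, assuming Works_For is 1:N (one department, many employees) and implementing it in SQLite via Python's `sqlite3`. Entities become tables, attributes become columns, and a 1:N relationship becomes a foreign key on the "many" side. The `NOT NULL` on `dept_id` is an added assumption that every employee must work for some department. An M:N relationship would instead need a junction table.

```python
import sqlite3

# Hypothetical ER model: Department 1 --< Works_For >-- N Employee.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE employee (
    employee_id INTEGER PRIMARY KEY,                               -- Employee_ID attribute
    name        TEXT NOT NULL,                                     -- Name attribute
    dept_id     INTEGER NOT NULL REFERENCES department(dept_id)    -- Works_For relationship
);
INSERT INTO department VALUES (1, 'Research'), (2, 'Sales');
INSERT INTO employee VALUES (10, 'Ana', 1), (11, 'Bo', 1), (12, 'Cy', 2);
""")

# Navigate the relationship: how many employees work for each department?
headcount = conn.execute("""
    SELECT d.name, COUNT(*)
    FROM department d
    JOIN employee e ON e.dept_id = d.dept_id
    GROUP BY d.name
    ORDER BY d.name
""").fetchall()
print(headcount)  # [('Research', 2), ('Sales', 1)]
```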

Practical Tips for ER Diagrams

When studying ER diagrams, keep the following tips in mind to improve both understanding and performance in assessments:

- Practice Drawing: The more you practice creating ER diagrams, the more intuitive it will become. Use real-world examples to build familiarity.

- Review Entity Relationships: Pay special attention to how entities interact, as this will help in identifying the correct types of relationships.

- Understand Cardinality: Knowing the cardinality (the number of instances) of each relationship will help in interpreting the diagram correctly.

Mastering the creation and interpretation of ER diagrams will enhance your ability to structure complex data models efficiently. With practice, these diagrams become powerful tools for understanding how data is organized and interconnected, ultimately leading to better design and analysis skills in the field of data architecture.

Normalization Forms and Their Use

Normalization is a process that helps ensure the efficiency and consistency of data organization by minimizing redundancy and dependency. By applying certain forms, the structure of the data is refined to make it more efficient and easier to maintain. These forms are a set of guidelines or rules that allow the design of well-structured and optimized data models. Understanding these forms is essential for anyone looking to create systems that are scalable and easy to query.

First Normal Form (1NF)

The first step in normalization is to ensure that the data is in the first normal form. This form requires that:

- Each table contains only atomic values, meaning no multiple values or arrays in a single field.

- All entries in a column must be of the same type.

- Each record must be uniquely identifiable, typically by a primary key.

In 1NF, the focus is on eliminating repeating groups and ensuring that each piece of data is stored in its most basic form.
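Bringing a table into 1NF typically means splitting a multi-valued field into its own table of atomic values. In this minimal sketch, the student records and the comma-separated `phones` field are hypothetical. The resulting `phones` rows would be keyed by the pair (student_id, phone).

```python
# A non-1NF record set: 'phones' holds several values in one field (hypothetical data).
unnormalized = [
    {"student_id": 1, "name": "Ana", "phones": "555-0101, 555-0102"},
    {"student_id": 2, "name": "Bo",  "phones": "555-0199"},
]

def to_1nf(rows):
    """Split the multi-valued field into a separate table of atomic values."""
    students = [(r["student_id"], r["name"]) for r in rows]
    phones = [
        (r["student_id"], phone.strip())
        for r in rows
        for phone in r["phones"].split(",")
        if phone.strip()  # ignore empty fragments such as a trailing comma
    ]
    return students, phones

students, phones = to_1nf(unnormalized)
print(students)  # [(1, 'Ana'), (2, 'Bo')]
print(phones)    # [(1, '555-0101'), (1, '555-0102'), (2, '555-0199')]
```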

Second Normal Form (2NF)

Once a structure is in 1NF, the next step is to achieve the second normal form. This form involves:

- Removing partial dependencies, which occur when a non-prime attribute depends on part of a composite primary key.

- Ensuring that all non-key attributes are fully dependent on the entire primary key.

2NF refines the structure further by ensuring that every non-key attribute is functionally dependent on the whole primary key, making it easier to maintain data consistency.
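A partial dependency can be spotted by testing whether part of the key already determines a non-key attribute. The `fd_holds` helper and the order-line data in this sketch are hypothetical. Sample rows can only refute a functional dependency, never prove it. Whether a dependency truly holds is a property of the business rules, not of one snapshot of data.

```python
def fd_holds(rows, lhs, rhs):
    """Check whether the functional dependency lhs -> rhs holds in the given rows.

    It holds if any two rows that agree on every lhs column also agree on every rhs column.
    """
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        value = tuple(row[c] for c in rhs)
        if seen.setdefault(key, value) != value:
            return False
    return True

# Hypothetical order-line table with composite key (order_id, product_id).
order_lines = [
    {"order_id": 1, "product_id": "P1", "qty": 2, "product_name": "Pen"},
    {"order_id": 1, "product_id": "P2", "qty": 1, "product_name": "Ink"},
    {"order_id": 2, "product_id": "P1", "qty": 5, "product_name": "Pen"},
]

# product_name depends on product_id alone, i.e. on part of the key:
# a partial dependency, so this table is not in 2NF.
print(fd_holds(order_lines, ["product_id"], ["product_name"]))  # True
# qty needs the whole key: product_id alone does not determine it.
print(fd_holds(order_lines, ["product_id"], ["qty"]))           # False
```

Moving `product_name` into a separate table keyed by `product_id` removes the partial dependency.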

Third Normal Form (3NF)

The third normal form goes a step further by eliminating transitive dependencies. In this form:

- No non-prime attribute should depend on another non-prime attribute.

- All attributes should depend directly on the primary key, with no intermediary dependencies between non-key fields.

3NF removes transitive dependencies, where the key determines one non-key attribute that in turn determines another. Such dependencies would otherwise duplicate data every time the intermediate attribute repeats.
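To make the idea of a transitive dependency concrete, here is a minimal sketch with a hypothetical helper `fd_holds` and a hypothetical employee table keyed by `emp_id`. The helper tests whether a functional dependency is consistent with sample rows. Note that sample data can only refute a dependency: whether it truly holds is a business rule, not something the rows prove.

```python
def fd_holds(rows, lhs, rhs):
    """Return True if, in these rows, equal values of the lhs columns
    always come with equal values of the rhs columns (lhs -> rhs)."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        val = tuple(row[c] for c in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True

# Hypothetical employee table keyed by emp_id.
employees = [
    {"emp_id": 1, "dept_id": "D1", "dept_name": "Sales"},
    {"emp_id": 2, "dept_id": "D1", "dept_name": "Sales"},
    {"emp_id": 3, "dept_id": "D2", "dept_name": "Research"},
]

# emp_id -> dept_id and dept_id -> dept_name: dept_name reaches the key
# only through the non-key column dept_id, a transitive dependency.
print(fd_holds(employees, ["emp_id"], ["dept_id"]))     # True
print(fd_holds(employees, ["dept_id"], ["dept_name"]))  # True

# 3NF decomposition: keep dept_id as a foreign key and move dept_name out.
employee = sorted({(r["emp_id"], r["dept_id"]) for r in employees})
department = sorted({(r["dept_id"], r["dept_name"]) for r in employees})
print(employee)    # [(1, 'D1'), (2, 'D1'), (3, 'D2')]
print(department)  # [('D1', 'Sales'), ('D2', 'Research')]
```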

Higher Normal Forms

Beyond the third normal form, there are additional stages of normalization that address more complex issues, such as:

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF, where every determinant is a candidate key.

- Fourth Normal Form (4NF): Addresses multi-valued dependencies, ensuring that no record contains more than one independent multivalued fact.

- Fifth Normal Form (5NF): Deals with join dependencies, ensuring that a table cannot be split into smaller tables and losslessly rejoined unless that split is already implied by its candidate keys.

These higher normal forms further enhance the design of a data model by addressing edge cases that may not be covered by the basic forms.
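The BCNF rule ("every determinant is a candidate key") is commonly checked with the attribute-closure algorithm. A determinant X qualifies if its closure covers every attribute, which makes X a superkey. The following is a minimal sketch with hypothetical helper names. The example uses the classic student/course/instructor schema, which is in 3NF but not BCNF. Checking only the listed dependencies is sufficient for the table they are defined on.

```python
def closure(attrs, fds):
    """Compute the attribute closure of `attrs` under the functional
    dependencies `fds`, given as (lhs, rhs) pairs of frozensets."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # If we already determine the whole lhs, we also determine the rhs.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result

def bcnf_violations(schema, fds):
    """Return the non-trivial FDs whose left side is not a superkey."""
    return [
        (lhs, rhs) for lhs, rhs in fds
        if not rhs <= lhs and not closure(lhs, fds) >= schema
    ]

def fd(lhs, rhs):
    """Build a dependency lhs -> rhs from space-separated attribute names."""
    return (frozenset(lhs.split()), frozenset(rhs.split()))

# Hypothetical schema: each instructor teaches exactly one course.
schema = {"student", "course", "instructor"}
fds = [fd("student course", "instructor"), fd("instructor", "course")]

violations = bcnf_violations(schema, fds)
for lhs, rhs in violations:
    print(sorted(lhs), "->", sorted(rhs))  # ['instructor'] -> ['course']
```

Here `instructor` determines `course` but is not a superkey, so the table violates BCNF. It still satisfies 3NF because `course` is part of the candidate key (student, course).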

Benefits of Normalization

- Elimination of Redundancy: Normalization removes unnecessary duplication, so each fact is stored once and storage is used more efficiently.

- Improved Consistency: Organizing data according to these forms reduces inconsistencies and prevents insertion, update, and deletion anomalies.

- Better Maintenance: Well-normalized data structures are easier to update and maintain, as changes are made in fewer places.

- Cheaper Writes and Storage: Smaller, focused tables use less space and make inserts and updates cheaper. Read queries may need extra joins, however, which is why read-heavy systems sometimes denormalize deliberately.

By understanding and applying normalization forms, data designers can create models that protect data integrity and remain easy to evolve, while weighing the cost of additional joins against these benefits.
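To show the update anomaly that normalization prevents, here is a minimal SQLite sketch with hypothetical tables. In the denormalized table, the department name is copied onto every employee row, so updating only one copy leaves the data contradicting itself. In the normalized design, the name lives in one row and a single UPDATE is enough.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Denormalized: the department name is repeated on every employee row.
CREATE TABLE emp_flat (emp_id INTEGER PRIMARY KEY, dept_id TEXT, dept_name TEXT);
INSERT INTO emp_flat VALUES (1, 'D1', 'Sales'), (2, 'D1', 'Sales');

-- Normalized: the department name is stored exactly once.
CREATE TABLE department (dept_id TEXT PRIMARY KEY, dept_name TEXT);
CREATE TABLE employee (emp_id INTEGER PRIMARY KEY,
                       dept_id TEXT REFERENCES department(dept_id));
INSERT INTO department VALUES ('D1', 'Sales');
INSERT INTO employee VALUES (1, 'D1'), (2, 'D1');
""")

# Update anomaly: renaming through one row leaves the copies disagreeing.
conn.execute("UPDATE emp_flat SET dept_name = 'Global Sales' WHERE emp_id = 1")
flat_names = conn.execute(
    "SELECT DISTINCT dept_name FROM emp_flat WHERE dept_id = 'D1' ORDER BY dept_name"
).fetchall()
print(flat_names)  # [('Global Sales',), ('Sales',)]

# Normalized: one UPDATE touches one row, and every employee sees the change.
cur = conn.execute(
    "UPDATE department SET dept_name = 'Global Sales' WHERE dept_id = 'D1'")
updated_rows = cur.rowcount
print(updated_rows)  # 1
joined_names = conn.execute("""
    SELECT DISTINCT d.dept_name FROM employee e JOIN department d USING (dept_id)
""").fetchall()
print(joined_names)  # [('Global Sales',)]
```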

How to Tackle DBMS Case Studies

Approaching case studies related to data structure and organization requires a methodical and structured strategy. These scenarios often present real-world problems where you must apply theoretical knowledge to find practical solutions. To solve these cases effectively, it’s crucial to understand the underlying concepts, break down the problem into manageable parts, and apply the right techniques to address each challenge. Developing a clear approach will help you navigate complex situations and arrive at a well-rounded solution.

Follow these steps to tackle such scenarios efficiently:

- Understand the Problem: Begin by thoroughly reading the case study. Identify the key issues and objectives, as well as any constraints or limitations provided. Understanding the context is essential to formulating an effective approach.

- Identify Key Concepts: Highlight the fundamental principles that apply to the case. This could include data integrity rules, relationship management, or optimization techniques. Knowing which concepts to apply will guide you towards the right solutions.

- Break Down the Problem: Divide the case study into smaller, manageable components. Each part may represent different challenges, such as designing efficient queries, optimizing data structures, or ensuring consistency across data elements.

- Apply Techniques and Methods: Use the appropriate methods such as normalization, indexing, or transaction management to resolve the issues identified. Make sure each technique you apply fits the specific challenge at hand.

- Review and Optimize: Once the solution is formulated, check it for redundancy, inefficiency, and areas for improvement. Refine the design to minimize data duplication, preserve consistency, and simplify any overly complex processes.
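When a case study asks whether an index helps, a useful habit is to compare query plans before and after adding it. The following is a minimal sketch using SQLite's `EXPLAIN QUERY PLAN` through Python's sqlite3 module; the `orders` table and the `idx_orders_customer` index are hypothetical. The exact wording of the plan text varies between SQLite versions, so the comments describe what to look for rather than exact output.

```python
import sqlite3

def plan(conn, sql, params=()):
    """Return the 'detail' column (the last one) of EXPLAIN QUERY PLAN rows."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    total       REAL NOT NULL)""")
conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                 [(i % 100, i * 1.5) for i in range(1000)])

query = "SELECT * FROM orders WHERE customer_id = ?"
before = plan(conn, query, (42,))  # expect a full-table SCAN
conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
after = plan(conn, query, (42,))   # expect a SEARCH via idx_orders_customer
print(before)
print(after)
```

Indexes speed up lookups like this one at the cost of extra storage and slower writes, so they belong on columns that queries actually filter or join on.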

By following these steps and using the right strategies, you can solve complex case studies efficiently. The key is to remain focused, apply your knowledge systematically, and constantly check for ways to improve the solution. With practice, you’ll gain the skills needed to handle any challenge that comes your way.

Exam Preparation Tips for DBMS

Preparing for a test focused on data structure, organization, and retrieval concepts can be challenging, but with the right approach, it becomes manageable. The key to success lies in understanding the core principles, practicing regularly, and reviewing important concepts that are frequently tested. A focused study strategy, combined with effective time management, will help you approach the material confidently and perform well under pressure.

Master the Fundamentals

Start by building a strong foundation in the core principles. Understand the basic terminologies, structures, and operations involved in handling data, such as relationships, keys, indexing, and normalization. These concepts are the building blocks for more complex scenarios and will help you tackle advanced topics with ease.

- Review key concepts regularly.

- Practice simple examples to solidify understanding.

- Ensure you can explain core terms clearly and concisely.

Focus on Problem-Solving Practice

Once the foundational knowledge is in place, focus on solving practice problems. These help you become familiar with the format and types of challenges you may encounter. Additionally, they allow you to apply your theoretical knowledge in practical scenarios. Use online resources, textbooks, or mock tests to practice a wide range of problems.

- Set aside time for daily problem-solving sessions.

- Focus on solving both theoretical and practical problems.

- Simulate timed conditions to enhance performance under pressure.

Finally, don’t forget to review past materials and practice under test conditions. This will help build confidence and reduce anxiety when the time comes. With dedication and consistent effort, you’ll be well-prepared to handle any challenge during the evaluation process.