Data Analysis with Python Coursera Final Exam Answers

In today’s world, mastering analytical tools and techniques is crucial for anyone pursuing a career in technology or research. By developing expertise in interpreting large datasets, individuals open doors to numerous professional opportunities. Acquiring these skills involves understanding core concepts, utilizing the right software, and applying problem-solving strategies effectively.

The path to proficiency often culminates in an assessment that evaluates your understanding and application of the skills acquired. This process can be daunting without the proper preparation, making it essential to approach it with a clear strategy. Success requires not only theoretical knowledge but also the ability to apply practical techniques efficiently in real-world scenarios.

To aid in this journey, a variety of resources are available to help learners navigate through the key topics. Mastery of key concepts, such as data manipulation, statistical techniques, and visualization methods, is fundamental. Equipping yourself with the right tools and techniques ensures success in both the test and future applications in the field.


Completing a comprehensive assessment is an important step in demonstrating your proficiency in interpreting and manipulating complex information. This stage evaluates your understanding of essential concepts and techniques, ensuring you’re prepared for real-world challenges. The ability to work through various tasks and present insightful results is crucial for anyone pursuing a career in this field.

Successfully navigating this test requires not just theoretical knowledge, but also practical skills. Understanding how to apply different tools to extract meaningful insights and how to approach problem-solving in a structured way are key components of success. Mastery over the course material, combined with efficient time management, will allow you to confidently handle any challenge presented.

Although it’s tempting to seek direct solutions, it’s far more beneficial to focus on the process itself: grasp the reasoning behind each task and become familiar with the underlying principles. This ensures that, beyond just passing the assessment, you will be equipped with the skills needed to thrive in future projects and work environments.

Overview of the Coursera Python Course

This course provides a structured approach to mastering key techniques for handling large datasets and extracting valuable insights. It covers the fundamentals of working with programming tools, as well as more advanced topics that are crucial for solving real-world problems. Through a combination of lectures, hands-on exercises, and practical examples, learners gain the skills necessary to perform complex tasks efficiently.

Course Structure and Content

The curriculum is designed to guide students through a series of progressively challenging tasks, starting from the basics and advancing to more specialized topics. Key areas of focus include data manipulation, statistical analysis, and effective visualization techniques. Each module builds on the previous one, reinforcing important concepts and enhancing problem-solving skills.

Learning Outcomes and Practical Application

By the end of the course, learners are expected to confidently apply the tools learned to real-world situations, solving problems and interpreting results accurately. Whether it’s for professional development or personal enrichment, the knowledge gained in this course equips participants to handle complex projects in various industries, making them valuable assets in any analytical role.

Key Skills Learned in the Python Course

Throughout this course, learners develop a variety of essential skills that are crucial for handling and interpreting large datasets. The focus is on practical applications and techniques that help solve real-world challenges. By mastering these skills, individuals gain the ability to approach complex problems with confidence and efficiency.

Core Technical Competencies

The course covers several key competencies that are fundamental for anyone working in this field:

- Mastery of data manipulation techniques for handling large volumes of information.

- Expertise in using libraries and tools for statistical analysis and pattern recognition.

- Ability to transform raw data into actionable insights through visualization techniques.

- Experience in automating repetitive tasks to improve workflow efficiency.

Practical Problem-Solving Skills

In addition to technical abilities, learners acquire practical problem-solving skills that enhance their ability to work on real-world projects:

- Approaching challenges methodically to find efficient solutions.

- Building analytical workflows that can be replicated and adapted to different situations.

- Integrating external datasets into projects to enrich the scope and accuracy of results.

- Collaborating on team-based projects to share insights and develop comprehensive solutions.

Tips for Preparing for the Exam

Proper preparation is essential for succeeding in any assessment. By following a well-structured approach, you can ensure that you are equipped to tackle the challenges ahead. The key to doing well lies in understanding the material thoroughly and practicing problem-solving techniques until they become second nature.

Review Course Materials Thoroughly

Start by revisiting the course content. Make sure you have a solid grasp of the core concepts and techniques covered in the lessons. Take notes on important points, and focus on areas where you feel less confident. Pay special attention to:

- Key concepts introduced in each module.

- Examples and case studies that illustrate real-world applications.

- Any new tools or libraries that were introduced during the course.

Practice Regularly

Hands-on practice is crucial for reinforcing theoretical knowledge. Work through exercises and problems from previous lessons to become familiar with the types of tasks that may appear in the assessment, and seek out supplementary practice materials to sharpen your skills further.

Time Management

During the assessment, managing your time effectively is just as important as solving the problems themselves. Allocate time for each task based on its complexity, and avoid spending too much time on any one question. It’s better to move forward and come back later if needed.

Stay Calm and Confident

Lastly, approach the assessment with a calm and confident mindset. Trust in the preparation you’ve done and tackle each problem methodically. If you encounter a challenging question, break it down into smaller parts and approach it step by step.

Common Challenges in Data Analysis

Working with complex datasets presents a variety of obstacles that can make the process of deriving insights challenging. These difficulties often arise from both technical and conceptual factors, and overcoming them requires both practice and a solid understanding of best practices. Recognizing these hurdles early on can help you better prepare for the tasks at hand.

Key Obstacles to Overcome

Here are some of the most common challenges faced when dealing with large-scale information:

- Handling incomplete or missing information that can lead to inaccurate conclusions.

- Dealing with inconsistent formats or errors within the dataset that complicate processing.

- Choosing the right tools and techniques for different tasks, especially when confronted with complex problems.

- Making sense of noise in the data, which can obscure meaningful trends or patterns.

Strategies for Addressing These Challenges

While these challenges may seem daunting, there are several strategies that can help navigate them successfully:

- Utilize data cleaning techniques to handle missing values and inconsistencies effectively.

- Apply appropriate transformation and normalization methods to ensure uniformity across datasets.

- Take a systematic approach to problem-solving, breaking complex tasks into manageable steps.

- Leverage visualization techniques to identify patterns and anomalies more easily.
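As a minimal sketch of the first two strategies, the snippet below fills missing readings with the median of the observed values and then applies min-max normalization so every value falls between 0 and 1. It uses only the standard library; the helper names `impute_median` and `min_max_scale` and the sample readings are hypothetical, and median imputation is only one of several reasonable choices.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the non-missing values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: avoid division by zero and map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical sensor readings with two missing entries.
readings = [12.0, None, 15.0, 30.0, None, 18.0]
cleaned = impute_median(readings)
scaled = min_max_scale(cleaned)
print(cleaned)
print(scaled)
```

The median is less sensitive to extreme values than the mean, which is why it is used here; on larger tables the same two steps are usually done with pandas `fillna` and vectorized column arithmetic.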

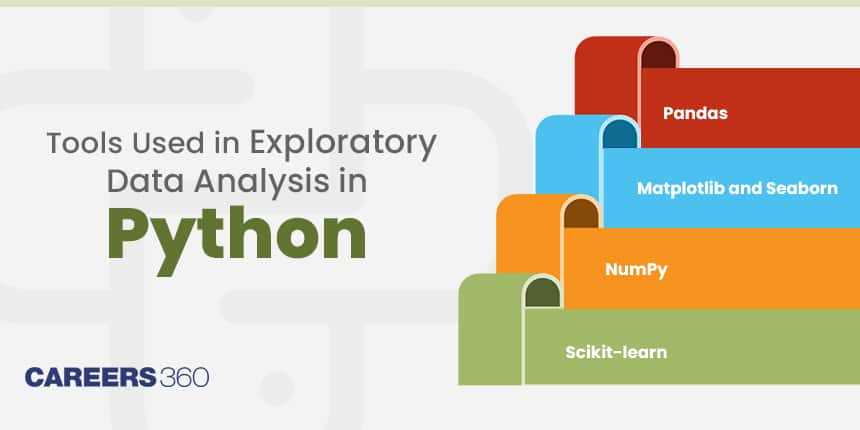

Python Libraries Used in the Course

In this program, a variety of powerful tools are introduced to help streamline and enhance the process of handling and manipulating complex datasets. These libraries are essential for performing key operations such as transforming raw information, applying statistical methods, and creating visual representations. By mastering these tools, learners can effectively manage a wide range of tasks, from basic processing to advanced modeling.

Essential Libraries for Manipulation, Processing, and Visualization

Several libraries are introduced that are critical for organizing, cleaning, transforming, and plotting information. These include:

- Pandas: A versatile tool for manipulating tabular data, including handling missing values and applying transformations.

- NumPy: A core library used for working with arrays, performing mathematical operations, and managing large datasets.

- Matplotlib: A fundamental library for creating static, animated, and interactive visualizations, helping to reveal patterns in the data.
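A short illustration of how pandas and NumPy work together: pandas holds the table and handles the missing value, while NumPy-backed arithmetic operates on whole columns at once. The order data and column names are hypothetical, and filling with the column mean is just one possible strategy.

```python
import numpy as np
import pandas as pd

# Hypothetical order data with one missing price.
orders = pd.DataFrame({
    "item": ["pen", "notebook", "stapler", "folder"],
    "price": [1.50, np.nan, 7.25, 2.00],
    "quantity": [10, 3, 1, 5],
})

# pandas: fill the missing price with the mean of the known prices.
orders["price"] = orders["price"].fillna(orders["price"].mean())

# Vectorized arithmetic: multiply whole columns without a Python loop.
orders["revenue"] = orders["price"] * orders["quantity"]

# A NumPy function applied element-wise to a pandas column.
orders["log_revenue"] = np.log1p(orders["revenue"])
print(orders)
```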

Advanced Tools for Modeling and Statistical Analysis

As learners progress through the course, they are introduced to more advanced libraries that support statistical modeling and machine learning:

- Scikit-learn: A comprehensive library for machine learning that provides algorithms for classification, regression, and clustering.

- Statsmodels: A library focused on statistical modeling and hypothesis testing, essential for conducting in-depth analysis.

Importance of Data Cleaning in Python

Effective handling of large datasets requires a crucial step: ensuring that the information is consistent, accurate, and free from errors. Before diving into any analysis or modeling, it’s essential to address issues like missing values, duplicates, or inconsistencies. The process of preparing information for further use not only improves the quality of results but also ensures that the outcomes are reliable and meaningful.

Key Benefits of Cleaning Information

Cleaning your dataset helps avoid common pitfalls that can lead to incorrect conclusions or flawed models. By carefully processing and refining the data, you achieve several key benefits:

- Improved Accuracy: Ensuring that all entries are correct and consistent minimizes the chances of errors during later stages.

- Better Model Performance: Cleaning up noisy data leads to more precise predictions and analyses, improving the overall effectiveness of your models.

- Efficient Analysis: Well-prepared information speeds up the process, enabling quicker and more efficient results.

Common Steps in Cleaning Information

There are several standard practices to ensure that the dataset is ready for further use:

- Handling missing or null values appropriately through imputation or removal.

- Removing duplicates to avoid skewing results.

- Standardizing formats to ensure consistency across variables.

- Identifying and addressing outliers that may distort the analysis.
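The four steps above can be sketched in pandas as follows. The temperature records are hypothetical, per-group median imputation is an assumed choice, and the 1.5 × IQR rule is one common outlier convention rather than the only one. Note the order: standardizing text first lets `drop_duplicates` catch rows that differed only in formatting.

```python
import pandas as pd

# Hypothetical raw records with a missing value, duplicate rows,
# inconsistent text formatting, and one suspicious measurement.
raw = pd.DataFrame({
    "city": ["Boston", "boston ", "Denver", "Denver", "Austin", "Austin"],
    "temp_f": [68.0, None, 55.0, 55.0, 75.0, 410.0],
})
df = raw.copy()

# 1. Standardize formats: trim whitespace and use consistent capitalization.
df["city"] = df["city"].str.strip().str.title()

# 2. Handle missing values: impute with the median reading for the same city.
df["temp_f"] = df["temp_f"].fillna(df.groupby("city")["temp_f"].transform("median"))

# 3. Remove exact duplicate rows (some only match after steps 1 and 2).
df = df.drop_duplicates().reset_index(drop=True)

# 4. Flag outliers with the 1.5 * IQR rule instead of silently dropping them.
q1, q3 = df["temp_f"].quantile([0.25, 0.75])
iqr = q3 - q1
df["is_outlier"] = (df["temp_f"] < q1 - 1.5 * iqr) | (df["temp_f"] > q3 + 1.5 * iqr)
print(df)
```

Flagging rather than deleting keeps the decision visible: `df[~df["is_outlier"]]` drops the flagged rows once you have confirmed they are errors.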

Working with Dataframes in Python

When dealing with structured information, the ability to efficiently organize, manipulate, and analyze the data is crucial. One of the most powerful tools available for this purpose is the dataframe, a versatile structure that allows you to handle tabular data easily. Mastering the use of dataframes enables you to perform a wide range of operations, from cleaning and filtering to complex transformations and aggregations.

Creating and Manipulating Dataframes

Creating a dataframe is straightforward, and it allows you to work with data in a row-column format. This structure makes it easier to perform operations like sorting, selecting specific columns, or applying transformations. Key operations you’ll often use include:

- Creating dataframes from various sources such as CSV files, dictionaries, or databases.

- Selecting specific rows and columns for more focused analysis.

- Filtering rows based on conditions or criteria that match certain patterns.

- Adding or modifying columns using vectorized operations for efficiency.
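A minimal pandas sketch of these basic operations, using a hypothetical sales table built from a dictionary (`pd.read_csv` would produce the same kind of object from a file):

```python
import pandas as pd

# Create a dataframe from a dictionary of columns.
sales = pd.DataFrame({
    "region": ["North", "South", "North", "West", "South"],
    "units": [120, 85, 60, 150, 40],
    "unit_price": [2.5, 3.0, 2.5, 2.0, 3.0],
})

# Select specific columns.
subset = sales[["region", "units"]]

# Filter rows with a boolean condition.
big_orders = sales[sales["units"] >= 100]

# Add a column with a vectorized operation (no explicit loop).
sales["revenue"] = sales["units"] * sales["unit_price"]

# Select rows and a column by label with .loc.
north_revenue = sales.loc[sales["region"] == "North", "revenue"]

# Sort rows by a column, largest first.
ranked = sales.sort_values("units", ascending=False)
print(big_orders)
print(north_revenue)
print(ranked)
```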

Advanced Operations with Dataframes

Once the basic operations are mastered, you can move on to more advanced tasks such as:

- Aggregating data using group-by functions to summarize and compute statistics across categories.

- Joining multiple dataframes based on common columns to merge information from different sources.

- Pivoting or reshaping data to analyze it from different angles.

- Handling missing or inconsistent data by applying various imputation techniques or dropping incomplete entries.
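The advanced operations can be sketched in pandas as below. Both tables are hypothetical; the left join and the "unassigned" placeholder are illustrative choices, not the only way to handle unmatched keys.

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["North", "South", "North", "West", "South"],
    "quarter": ["Q1", "Q1", "Q2", "Q2", "Q2"],
    "revenue": [300.0, 255.0, 150.0, 300.0, 120.0],
})
# Hypothetical second source that shares the "region" column.
managers = pd.DataFrame({
    "region": ["North", "South", "East"],
    "manager": ["Ana", "Ben", "Cai"],
})

# Group-by aggregation: total and mean revenue per region.
summary = sales.groupby("region")["revenue"].agg(["sum", "mean"])

# Join on a common column; a left join keeps every sale even without a match.
joined = sales.merge(managers, on="region", how="left")

# Handle the gap the join introduced (West has no manager).
joined["manager"] = joined["manager"].fillna("unassigned")

# Pivot: regions as rows, quarters as columns, missing combinations set to 0.
pivot = sales.pivot_table(index="region", columns="quarter",
                          values="revenue", aggfunc="sum", fill_value=0)
print(summary)
print(joined)
print(pivot)
```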

Visualizing Data with Python Tools

Presenting information visually can significantly enhance understanding, making patterns, trends, and insights more accessible. By using various visualization tools, you can transform raw figures into clear, interpretable graphics. These tools not only help you communicate findings effectively but also allow you to explore complex relationships within your datasets in an intuitive way.

Popular Visualization Libraries

There are several widely used libraries that make the task of creating visuals much easier:

- Matplotlib: A powerful tool for creating static, animated, and interactive graphs. It offers extensive customization for producing high-quality charts, from simple line plots to detailed multi-panel figures.

- Seaborn: Built on top of Matplotlib, Seaborn provides a high-level interface for creating aesthetically pleasing and informative statistical graphics.

- Plotly: A dynamic library that enables interactive charting and dashboard creation, allowing users to zoom, hover, and explore visualizations in real time.

Common Visualization Types

Depending on the nature of the information, different types of visuals are more appropriate. Some of the most commonly used chart types include:

- Bar Charts: Ideal for comparing quantities across different categories.

- Line Charts: Great for illustrating trends over time or continuous data.

- Heatmaps: Useful for showing values across two dimensions, such as a correlation matrix, by encoding magnitude as color.

- Scatter Plots: Best for visualizing the relationship, such as a correlation, between two numerical variables.
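To make the chart types concrete, here is a small Matplotlib sketch that draws a bar chart, a line chart, and a scatter plot side by side. All data values are hypothetical, and the non-interactive "Agg" backend is an assumption so the script can run without a display; it writes the figure to `charts.png`.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend: render to files, no window needed
import matplotlib.pyplot as plt

# Hypothetical data for illustration.
categories = ["North", "South", "West"]
totals = [450, 375, 300]
months = [1, 2, 3, 4, 5, 6]
visitors = [120, 135, 150, 145, 170, 190]
ad_spend = [10, 12, 15, 14, 18, 21]

fig, (ax_bar, ax_line, ax_scatter) = plt.subplots(1, 3, figsize=(12, 3.5))

# Bar chart: compare quantities across categories.
ax_bar.bar(categories, totals)
ax_bar.set_title("Revenue by region")

# Line chart: show a trend over time.
ax_line.plot(months, visitors, marker="o")
ax_line.set_title("Visitors per month")
ax_line.set_xlabel("Month")

# Scatter plot: relationship between two numerical variables.
ax_scatter.scatter(ad_spend, visitors)
ax_scatter.set_title("Visitors vs. ad spend")
ax_scatter.set_xlabel("Ad spend")

fig.tight_layout()
fig.savefig("charts.png")
```

A heatmap can be added in the same style with `ax.imshow` on a 2-D array, or with Seaborn's `heatmap` function.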

How to Solve Exam Questions Efficiently

Approaching a set of questions during an assessment can often feel overwhelming. However, a structured strategy can help you tackle each problem systematically and maximize your performance. By organizing your thought process, reviewing the requirements carefully, and applying the right techniques, you can enhance your efficiency and accuracy while working through the questions.

One of the first steps is to understand what each question is asking. Break down complex queries into smaller, manageable parts. This way, you can focus on solving one element at a time, rather than trying to tackle everything at once. A clear, step-by-step approach will help you stay on track and avoid missing critical points.

Next, prioritize the questions based on your strengths and the time available. Start with those you are most comfortable with, and then move on to more challenging ones. This ensures that you accumulate points early on, giving you confidence and time to focus on tougher tasks later.

- Read carefully: Ensure you understand the requirements before starting each question. Look for key instructions or constraints that may guide your solution.

- Break it down: Split the problem into smaller tasks. This will allow you to approach each part methodically.

- Time management: Allocate your time wisely. Don’t spend too long on any one problem, and keep track of your progress.

- Check your work: Always review your solution once you’ve completed it. This ensures you haven’t missed any details or made any errors.

Time Management During the Final Exam

Effectively managing your time during an assessment is crucial to ensure that you complete all questions within the given time limit. A well-planned approach allows you to allocate time appropriately to each section, minimizing the chances of rushing or leaving questions incomplete. It’s important to remain calm and focused, keeping track of time while also ensuring accuracy in your work.

Steps for Effective Time Allocation

One key strategy is to divide the available time based on the complexity and weight of each section. Starting with an overall plan can help you maintain a steady pace throughout the test.

| Section | Estimated Time | Details |
|---|---|---|
| Introduction/Overview | 5 minutes | Read instructions carefully and organize your strategy. |
| Easy Questions | 30% of total time | Start with the simpler questions to gain momentum and confidence. |
| Medium Complexity Questions | 40% of total time | Dedicate time to questions that require more thought but are manageable. |
| Challenging Questions | 20% of total time | Work on the hardest questions last, ensuring you have time to address them fully. |
| Review and Refinement | 5-10 minutes | Leave time for reviewing your work, ensuring everything is correct. |
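The percentages in this table can be turned into concrete minutes. The sketch below is one hedged reading of it: it assumes the 30/40/20 shares apply to the time left after a fixed 5-minute introduction and a 10-minute review, and because those shares sum to 90%, the leftover 10% is kept as a buffer. `allocate_time` is a hypothetical helper, not part of any course material.

```python
def allocate_time(total_minutes, intro=5, review=10):
    """Split exam time into sections (hypothetical helper).

    Assumption: the 30/40/20 shares apply to the time left after the fixed
    introduction and review blocks; the remaining 10% becomes a buffer.
    """
    working = total_minutes - intro - review
    shares = {"easy": 0.30, "medium": 0.40, "challenging": 0.20}
    plan = {"introduction": intro}
    for section, share in shares.items():
        plan[section] = round(working * share, 1)
    plan["buffer"] = round(working - sum(plan[s] for s in shares), 1)
    plan["review"] = review
    return plan


print(allocate_time(90))
```

For a 90-minute exam this gives 22.5, 30, and 15 minutes for the three question groups, with 7.5 minutes of slack for questions you return to later.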

Maintaining Focus and Avoiding Overthinking

Sticking to the time limits for each section is important, but it’s also crucial to avoid spending too much time on any one question. If you find yourself stuck, move on to the next one and come back later if there is time. The key is to stay calm, not overthink, and trust your preparation.

Recommended Resources for Exam Success

To excel in any assessment, it’s essential to have access to reliable resources that can guide you through the concepts and provide practice opportunities. Leveraging a mix of textbooks, online platforms, and interactive tools can significantly enhance your preparation. A good combination of theory and hands-on experience helps reinforce the material and boosts confidence.

Key Learning Materials

Various materials are available for those preparing for an evaluation, ranging from online courses to reading materials and problem-solving platforms. Below is a list of valuable resources to strengthen your knowledge:

| Resource Type | Examples | Description |
|---|---|---|
| Books | Programming and problem-solving textbooks | Books that provide in-depth explanations and examples of the concepts covered in the course; ideal for theoretical understanding. |
| Online Platforms | edX, Udemy, Khan Academy | These platforms offer supplementary courses, tutorials, and exercises that help reinforce learning. |
| Interactive Coding Tools | Replit, Jupyter Notebooks | Coding environments where you can run and experiment with code directly and see results immediately. |
| Practice Websites | LeetCode, HackerRank | Problem-solving websites that provide challenges and quizzes to build and test skills. |
| Communities and Forums | Stack Overflow, Reddit | Forums where learners can ask questions, discuss topics, and find solutions to problems. |

Additional Study Tips

In addition to using these resources, it’s crucial to develop good study habits. Break your learning into smaller sections, tackle one topic at a time, and schedule regular review sessions. Staying organized and maintaining consistent practice will help you feel well-prepared for any challenge.

Exploring Python Functions for Data Tasks

Functions in any programming language are essential for automating and simplifying repetitive tasks. In the context of working with large sets of information, understanding and utilizing different functions allows for more efficient processing and manipulation. From performing calculations to transforming structures, functions provide the foundation for working with complex operations.

Python offers a variety of built-in functions designed to streamline tasks such as data manipulation, aggregation, and transformation. These functions make it possible to handle large datasets more effectively and perform operations like sorting, filtering, and pairing related values. By mastering these built-in utilities, programmers can write more concise and efficient code, saving time and improving overall performance.

Here are some commonly used Python functions for handling tasks in this field:

- map(): A function used to apply a specified operation to each item in an iterable, simplifying transformations and modifications.

- filter(): This function helps in filtering out elements from a collection based on a defined condition, making data cleaning easier.

- reduce(): Part of the functools module, this function is helpful for performing cumulative operations, such as summing values or finding products.

- sorted(): This built-in function is used to sort any iterable in ascending or descending order, aiding in organizing information for better readability.

- zip(): A function that pairs elements from multiple sequences together, which is useful for aligning related datasets.
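Each of the functions above fits in a line or two. The sketch below applies them to a hypothetical list of exam scores; the names and values are illustrative only.

```python
from functools import reduce

scores = [72, 88, 95, 60, 81]
names = ["Ana", "Ben", "Cai", "Dee", "Eli"]

# map(): apply an operation to every item (here, convert to a 0-1 scale).
fractions = list(map(lambda s: s / 100, scores))

# filter(): keep only the items that satisfy a condition.
passing = list(filter(lambda s: s >= 70, scores))

# reduce(): combine items cumulatively (here, a running sum starting at 0).
total = reduce(lambda acc, s: acc + s, scores, 0)

# zip(): pair up elements from parallel sequences.
pairs = list(zip(names, scores))

# sorted(): return a new sorted list; key= and reverse= control the ordering.
ranked = sorted(pairs, key=lambda pair: pair[1], reverse=True)
print(ranked[:3])
```

For a plain total the built-in `sum(scores)` is simpler; `reduce` is shown because it generalizes to other cumulative operations such as products.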

Using these functions not only enhances code efficiency but also leads to clearer and more organized workflows. When combined with libraries such as NumPy and Pandas, these functions become powerful tools for managing and processing collections of information effectively.

Understanding Statistical Analysis in Python

In any field involving numbers, understanding patterns and making sense of large quantities of information are essential tasks. The process of extracting meaningful insights from raw figures can be complex, but it becomes more manageable with the right tools. By applying statistical methods, we can summarize, interpret, and draw conclusions from the information in an efficient way. Using specialized functions and libraries, performing these tasks becomes both quicker and more accurate.

Statistical techniques help in uncovering trends, relationships, and hidden patterns in numerical sets, allowing professionals to make informed decisions. Python provides a wealth of libraries designed specifically for performing these operations. These tools offer a wide range of capabilities, from basic calculations to more advanced statistical models, providing flexibility for users to explore and interpret their information.

Key Techniques in Statistical Computations

There are several common techniques that are often used to perform statistical tasks, such as:

- Descriptive Statistics: This includes measures like mean, median, mode, variance, and standard deviation, which summarize the main features of a dataset.

- Correlation: Measuring the strength and direction of the relationship between two variables. A strong correlation does not by itself show that one variable causes changes in the other.

- Hypothesis Testing: A method for judging whether the data provide enough evidence to reject a null hypothesis, using tests such as t-tests or ANOVA.

- Regression Analysis: This technique helps in modeling relationships between variables, often used for prediction and trend analysis.
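The descriptive, correlation, and regression ideas can be sketched with the standard library alone. The study-hours data below is hypothetical, and the Pearson coefficient and least-squares line are computed from their textbook formulas so every step is visible.

```python
import statistics

# Hypothetical paired observations: hours studied (x) and quiz score (y).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

# Descriptive statistics (stdev is the *sample* standard deviation).
print(statistics.mean(scores), statistics.median(scores), statistics.stdev(scores))


def pearson_r(x, y):
    """Pearson correlation: sum of co-deviations over the root of the product
    of squared-deviation sums (the 1/(n-1) factors cancel)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def least_squares(x, y):
    """Fit y = slope * x + intercept by ordinary least squares."""
    mx, my = statistics.mean(x), statistics.mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


r = pearson_r(hours, scores)
slope, intercept = least_squares(hours, scores)
print(f"r = {r:.3f}, score = {slope:.1f} * hours + {intercept:.1f}")
```

On Python 3.10 and later, `statistics.correlation` and `statistics.linear_regression` provide the same two calculations directly.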

Python Libraries for Statistical Tasks

Python offers several libraries that are specifically designed to handle statistical computations. Some of the most commonly used ones include:

- NumPy: A powerful library for handling numerical computations and performing array operations.

- SciPy: Built on top of NumPy, this library provides additional statistical functions, including probability distributions and statistical tests.

- Statsmodels: A library designed for statistical modeling, including regression analysis and time-series analysis.

- Pandas: Often used for data manipulation, it also has built-in methods for performing statistical operations on tabular datasets.
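As a sketch of how two of these libraries divide the work, pandas summarizes each group and SciPy runs a hypothesis test on the same data. The measurements are hypothetical, and Welch's t-test (`equal_var=False`) is chosen here because it does not assume the two groups share a variance.

```python
import pandas as pd
from scipy import stats

# Hypothetical measurements from two groups.
df = pd.DataFrame({
    "group": ["A"] * 5 + ["B"] * 5,
    "value": [12.1, 11.8, 12.5, 12.0, 12.3, 13.0, 12.7, 13.4, 12.9, 13.1],
})

# pandas: per-group summary statistics (count, mean, std, quartiles, ...).
summary = df.groupby("group")["value"].describe()
print(summary[["count", "mean", "std"]])

# SciPy: Welch's two-sample t-test on the two groups.
a = df.loc[df["group"] == "A", "value"]
b = df.loc[df["group"] == "B", "value"]
result = stats.ttest_ind(a, b, equal_var=False)
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.4f}")
```

A small p-value suggests the observed difference in means would be unlikely if the two groups truly had equal means; it says nothing about the size or practical importance of the difference.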

By mastering these techniques and tools, users can perform a wide variety of statistical tasks in an efficient manner, ultimately leading to better decision-making and deeper insights into complex sets of numbers.

Post-Exam Tips for Career Growth

After completing a rigorous assessment, it’s important to focus on the next steps that will propel your professional journey. Success in such an evaluation reflects not only your technical skills but also your ability to problem-solve, think critically, and learn independently. However, the real value lies in how you leverage that knowledge to enhance your career trajectory. By focusing on continuous improvement and applying what you’ve learned, you can unlock new opportunities and accelerate your progress.

Here are some actionable strategies to help you transition from a successful evaluation to long-term career advancement:

- Keep Learning: The learning process doesn’t stop after an assessment. Continue exploring new topics and expanding your skillset. Whether through online courses, books, or hands-on projects, staying curious and adaptable is key to remaining competitive in a fast-evolving field.

- Build a Strong Portfolio: Showcasing your practical skills is essential. Create and maintain a portfolio of projects that demonstrate your abilities. Include both personal and professional projects that highlight your expertise and problem-solving skills.

- Network Actively: Networking is a powerful tool for career growth. Attend industry events, participate in online communities, and connect with professionals in your field. Networking opens doors to collaborations, job opportunities, and mentorship.

- Seek Mentorship: Finding a mentor can accelerate your career development. Look for someone who has experience in your area of interest and can provide valuable guidance, feedback, and advice.

- Apply Your Skills in Real-World Projects: Practical experience is invaluable. Take on freelance work, internships, or volunteer opportunities to gain hands-on experience. Real-world projects allow you to refine your abilities and build a professional reputation.

- Stay Up-to-Date: The world of technology and problem-solving methodologies is constantly evolving. Follow industry news, blogs, and podcasts to stay informed about new trends, tools, and best practices.

Remember, professional growth is a continuous journey. By staying proactive and committed to self-improvement, you can transform your accomplishments into meaningful career advancements.

Why Python Is Key for Data Science

In today’s technology-driven world, one programming language stands out for its versatility, ease of use, and powerful capabilities: Python. This language has become a cornerstone for professionals working in fields that involve handling and interpreting complex information. It provides the tools and libraries needed to work efficiently across a wide range of tasks, making it an essential skill for anyone looking to pursue a career in data science, machine learning, or computational research.

Ease of Learning and Use

Python is widely known for its straightforward syntax and readability, which makes it an ideal choice for both beginners and experienced developers. Unlike some programming languages with steeper learning curves, Python allows users to quickly grasp fundamental concepts and start writing code almost immediately. Its intuitive structure and extensive documentation make it easy to find support and resources, helping to accelerate the learning process.

Comprehensive Libraries and Frameworks

One of the most significant reasons for Python’s dominance in the field is its rich ecosystem of libraries and frameworks that extend its functionality. Libraries like NumPy, Pandas, and Matplotlib enable users to perform advanced operations on large sets of information, visualize results, and manipulate data efficiently. For more sophisticated tasks, frameworks such as Scikit-learn and TensorFlow provide powerful tools for machine learning and predictive modeling.

Python’s extensive support for third-party libraries, combined with its ability to integrate seamlessly with other tools and platforms, makes it indispensable for professionals working in fields that demand high levels of computational power and flexibility.