Machine Learning with Python Final Exam Answers

In the rapidly evolving world of technology, the ability to develop intelligent systems is becoming increasingly essential. This process involves building and fine-tuning models that can analyze complex datasets, recognize patterns, and make predictions. Gaining proficiency in these techniques can significantly enhance your problem-solving abilities, whether you’re tackling business challenges or working on academic projects.

Throughout this section, we will explore core principles, methods, and practices that are essential for anyone looking to excel in this field. By diving deep into the foundational concepts, practical approaches, and common pitfalls, you’ll be well-equipped to apply your knowledge effectively. A solid grasp of these topics is crucial for anyone aiming to succeed in evaluations or real-world applications of data science and statistical modeling.

Preparation plays a vital role in achieving proficiency. By mastering the tools and techniques available, you can confidently navigate through tasks and challenges. Whether you’re reviewing for a key assessment or improving your skills for future projects, this guide will serve as a comprehensive resource. Each section is designed to help you develop both theoretical understanding and practical expertise.

In this section, we focus on key concepts and techniques that are essential for mastering data analysis and predictive modeling. The process of building intelligent systems involves understanding complex patterns within data, applying appropriate algorithms, and fine-tuning models for accurate predictions. Gaining a deep understanding of these principles is crucial for tackling real-world challenges and succeeding in assessments.

By reviewing practical examples and methodologies, you will be better equipped to navigate through problem-solving scenarios effectively. These include selecting the right tools, optimizing performance, and evaluating model outcomes. A solid foundation in these topics ensures that you can address various challenges and successfully demonstrate your skills in academic or professional settings.

Additionally, this guide emphasizes the importance of preparation and practice. Through repeated exposure to key tasks and common pitfalls, you will be able to confidently approach any task. Mastering these techniques is not only beneficial for exams but also essential for applying data-driven insights in a variety of industries.

Overview of Machine Learning Concepts

Understanding how algorithms can extract meaningful insights from large datasets is essential for developing automated systems capable of making informed decisions. The process involves several core principles, including data preparation, model selection, and performance evaluation. Gaining a solid grasp of these fundamentals forms the foundation for tackling more advanced techniques and ensuring successful outcomes in practical applications.

Core Components of Data Analysis Systems

Effective data analysis relies on several critical stages, each contributing to the overall success of predictive modeling. The key steps include:

| Stage | Description |
|---|---|
| Data Collection | Gathering relevant and high-quality data for analysis. |
| Data Preprocessing | Cleaning and transforming data to ensure accuracy and consistency. |
| Model Development | Creating and training algorithms to recognize patterns and make predictions. |
| Model Evaluation | Assessing model performance using metrics such as accuracy and precision. |

Key Techniques in Data Modeling

Once the data is prepared, the next step involves selecting the appropriate technique for analysis. Several popular approaches are commonly used, each suited for different types of problems:

| Technique | Application |
|---|---|
| Regression | Predicting continuous values based on input variables. |
| Classification | Categorizing data into distinct classes or groups. |
| Clustering | Grouping similar data points without predefined labels. |
| Dimensionality Reduction | Reducing the number of variables to simplify analysis. |

Understanding these core principles is essential for building effective systems that can analyze data and make predictions. These foundational concepts lay the groundwork for mastering more advanced techniques and optimizing model performance.

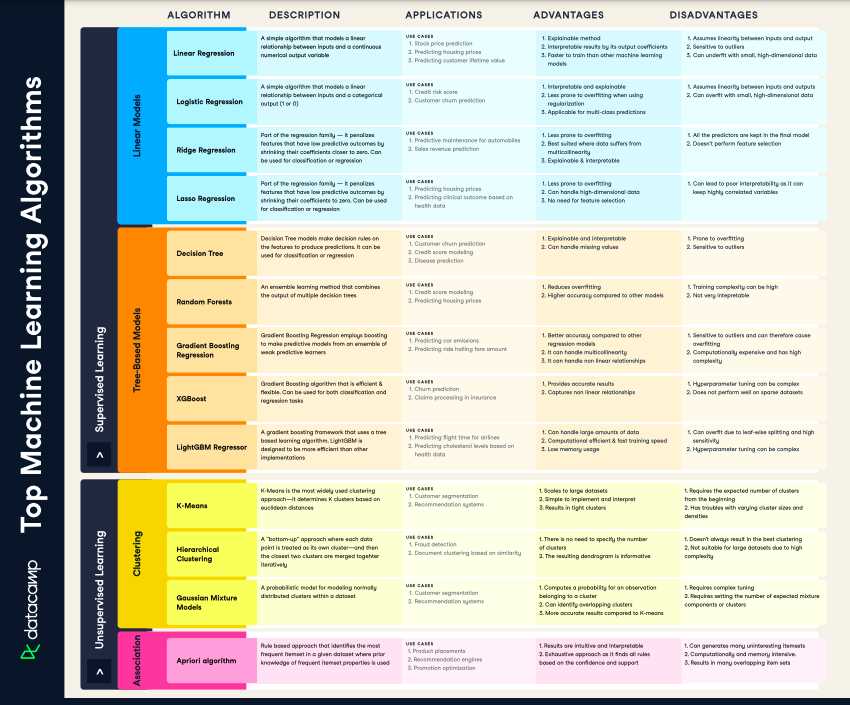

Key Algorithms in Python for ML

When developing intelligent systems, selecting the right algorithm is crucial for extracting meaningful insights from data. Various techniques are designed to tackle different types of problems, from classification tasks to regression analysis. Understanding the core algorithms and their applications allows you to choose the most effective approach for each scenario. In this section, we explore some of the most important algorithms commonly used in data modeling.

Popular Algorithms for Predictive Modeling

There are several widely used algorithms that can be applied depending on the problem at hand. Each one has its own strengths, making it suitable for different types of data and goals. Below are some of the key algorithms:

- Linear Regression: Used for predicting continuous values based on linear relationships between variables.

- Logistic Regression: A technique for binary classification problems, helping to predict the likelihood of an event occurring.

- Decision Trees: These algorithms are useful for both classification and regression tasks, offering simple and interpretable models.

- Random Forest: An ensemble method that uses multiple decision trees to improve accuracy and reduce overfitting.

- K-Nearest Neighbors (KNN): A non-parametric algorithm that classifies a point by the labels of its closest data points; it can also be used for regression.

- Support Vector Machines (SVM): This method is effective for classification tasks, finding the optimal boundary between classes.
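To make one of the algorithms above concrete, here is a minimal sketch of K-Nearest Neighbors classification using only the standard library. The function name `knn_predict` and the toy points are illustrative assumptions, not part of any library; in practice a tested implementation such as scikit-learn's `KNeighborsClassifier` would normally be used.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair every training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most common label among the k neighbours wins.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical toy data: two well-separated groups.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["a", "a", "a", "b", "b", "b"]

near_a = knn_predict(points, labels, (2, 2))
near_b = knn_predict(points, labels, (6, 5))
```

The choice of `k` is itself a hyperparameter: small values follow the training data closely, while larger values smooth the decision boundary.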

Advanced Techniques for Complex Problems

For more intricate datasets or complex problems, advanced algorithms may be required to achieve optimal results. These include:

- Neural Networks: Models inspired by the human brain, capable of handling highly complex patterns and large-scale data.

- Gradient Boosting Machines (GBM): An ensemble method that builds weak models sequentially, each one correcting the errors of those before it, to create a powerful predictive system.

- K-Means Clustering: A popular unsupervised learning technique for grouping data into clusters based on similarity.

- Principal Component Analysis (PCA): A dimensionality reduction technique that simplifies datasets by transforming them into principal components.

Mastering these algorithms is essential for anyone looking to excel in data analysis. By understanding their strengths and weaknesses, you can select the best approach for solving different types of problems, ultimately improving the efficiency and accuracy of your models.

Understanding Data Preprocessing Techniques

Before building any predictive models, it is essential to ensure that the data is in a format suitable for analysis. Raw data often contains inconsistencies, missing values, or irrelevant information that can negatively impact the performance of algorithms. Preprocessing techniques are employed to clean, transform, and organize the data, making it more suitable for model training and evaluation. These steps are critical in obtaining accurate and reliable results from any dataset.

Common Data Transformation Methods

Data transformation involves converting the raw data into a usable form. Below are some common methods used in preprocessing:

| Transformation | Purpose |
|---|---|
| Normalization | Scaling numerical data to a consistent range (commonly 0 to 1), so that features with large magnitudes do not dominate. |
| Standardization | Adjusting the data to have a mean of 0 and a standard deviation of 1, useful for algorithms sensitive to feature scaling. |
| Encoding Categorical Data | Converting non-numeric variables into numeric values, which is essential for many algorithms. |
| Handling Missing Values | Filling in or removing missing data points to avoid bias or incorrect model predictions. |
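The difference between normalization and standardization is easiest to see on a small column of numbers. The sketch below uses only the standard library; the helper names and the `ages` column are assumptions made for illustration. scikit-learn offers `MinMaxScaler` and `StandardScaler` for the same purpose.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Normalization: rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Standardization: shift to mean 0, scale to population std 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

ages = [20, 30, 40, 50, 60]  # hypothetical feature column
normalized = min_max_scale(ages)
standardized = standardize(ages)
```

When a model is evaluated on held-out data, the minimum, maximum, mean, and standard deviation should be computed on the training data only and then reused for the test data, so that no information leaks from the test set.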

Techniques for Cleaning and Preparing Data

In addition to transformation, data cleaning plays a vital role in preparing the dataset for analysis. The most common cleaning methods include:

- Outlier Detection: Identifying and removing or adjusting extreme values that may distort analysis.

- Data Imputation: Filling in missing values using various techniques, such as mean or median substitution, or more advanced methods like regression imputation.

- Feature Selection: Removing irrelevant or redundant features to reduce complexity and improve model performance.

By implementing these techniques, you ensure that the dataset is accurate, consistent, and ready for use in any modeling task. Proper data preprocessing is a critical step that can significantly enhance the effectiveness of predictive models.
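As a rough illustration of two of the cleaning steps above, the following sketch fills missing values with the median and then flags outliers with the common 1.5 × IQR rule. The function names, the `incomes` column, and the choice of the IQR rule are assumptions for this example; other imputation and outlier rules are equally valid.

```python
from statistics import median, quantiles

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

incomes = [42, 38, None, 45, 41, 400, None, 39]  # hypothetical column
filled = impute_median(incomes)
outliers = iqr_outliers(filled)
```

The median is used here rather than the mean because a single extreme value (400 in this toy column) would pull the mean far from the typical value.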

Building Models with Python Libraries

Constructing predictive models requires not only an understanding of algorithms but also the use of powerful tools that can streamline the development process. Libraries provide pre-built functions and classes that simplify the implementation of complex tasks, allowing for faster development and better performance. In this section, we will explore how various libraries can be leveraged to build robust models, handle data, and evaluate results effectively.

Several popular libraries are widely used for data analysis, model creation, and evaluation. These libraries offer extensive functionality, from basic statistical operations to complex machine learning techniques. By mastering these libraries, developers and data scientists can save time, reduce errors, and enhance the accuracy of their models.

Key Libraries for Model Development:

- Scikit-learn: This is one of the most commonly used libraries for implementing classical algorithms. It provides easy-to-use tools for regression, classification, clustering, and more.

- TensorFlow: A comprehensive library for deep learning, used to build and train neural networks. It supports a variety of architectures and is highly scalable.

- Keras: A user-friendly library built on top of TensorFlow, simplifying the process of designing and training deep neural networks.

- PyTorch: Another powerful deep learning framework that is known for its flexibility and ease of use, especially in research and prototyping.

- Pandas: Although not directly involved in model building, Pandas is essential for data manipulation and cleaning, providing efficient structures for handling large datasets.

- Matplotlib and Seaborn: These visualization libraries are crucial for understanding the relationships in data and assessing model performance through charts and plots.

By utilizing these libraries, developers can efficiently build models, streamline their workflow, and ensure they are using the best practices for optimal results. Understanding how to leverage these tools is crucial for anyone looking to succeed in data-driven projects or assessments.

Evaluating Model Performance in Python

Once a model is built, it is essential to assess its performance to ensure that it meets the desired accuracy and generalizes well to new data. Evaluation allows you to understand how well the model is making predictions and where improvements might be needed. There are various metrics and techniques available to measure a model’s effectiveness, each suited for different types of tasks and objectives. A proper evaluation process is critical for achieving reliable results and refining the model.

Common Evaluation Metrics: Different types of tasks, such as classification, regression, and clustering, require different metrics to evaluate the model. Some of the key metrics include:

- Accuracy: The percentage of correct predictions made by the model, commonly used for classification problems.

- Precision and Recall: Precision measures the correctness of positive predictions, while recall measures the ability to identify all relevant cases.

- F1-Score: The harmonic mean of precision and recall, giving a single score that remains informative when classes are imbalanced.

- Mean Squared Error (MSE): A common metric for regression tasks, it measures the average squared difference between predicted and actual values.

- R-Squared: A metric used in regression analysis to determine how well the model fits the data. It represents the proportion of the variance in the dependent variable that is predictable from the independent variables.
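The metrics listed above can be computed directly from their definitions. The sketch below is a plain-Python version for a binary classification task and a regression task; the function names and the sample labels are illustrative assumptions. scikit-learn's `sklearn.metrics` module provides tested equivalents.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

def regression_metrics(y_true, y_pred):
    """Mean squared error and R-squared."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {"mse": ss_res / n, "r2": 1 - ss_res / ss_tot}

# Hypothetical labels and predictions.
clf = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0],
                             [1, 0, 0, 1, 0, 1, 1, 0])
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 9.0])
```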

Cross-Validation: Another essential technique is cross-validation, which involves splitting the dataset into multiple subsets and training the model on different combinations of these subsets. This helps ensure that the model performs consistently and is not overfitting to one particular portion of the data. The most common form is k-fold cross-validation, where the data is divided into ‘k’ parts and the model is trained ‘k’ times, each time using a different subset for testing.

Visualization of Results: Visual tools like confusion matrices and ROC curves also play an important role in model evaluation. They provide insights into the types of errors the model is making and allow for a deeper understanding of its strengths and weaknesses.

Evaluating model performance is a continuous process. By regularly applying these metrics and techniques, you can ensure that your model is both accurate and reliable, and make informed decisions about potential adjustments to improve its predictive capabilities.

Common Mistakes in Machine Learning

Building effective predictive models is a complex task, and it’s easy to make mistakes along the way. Even experienced developers can encounter pitfalls that hinder model performance or lead to incorrect conclusions. These errors often stem from misunderstanding the data, improperly tuning models, or using inappropriate evaluation methods. Identifying and avoiding common mistakes is essential for improving the reliability and accuracy of any project.

1. Overfitting the Model: One of the most frequent mistakes is overfitting, where the model becomes too closely aligned with the training data. This means it performs very well on the training set but struggles to generalize to new, unseen data. This can happen when the model is overly complex or when there is not enough data to support the complexity.

2. Ignoring Data Quality: Raw data is rarely clean, and skipping proper preprocessing steps can lead to flawed results. Failing to handle missing values, remove outliers, or normalize data can significantly impact model performance. It’s crucial to spend time cleaning and transforming the data before feeding it into the model.

3. Not Understanding the Algorithms: Using algorithms without understanding how they work or their limitations can lead to suboptimal performance. It’s important to know the strengths and weaknesses of each method and to choose the right one for the specific problem you are solving.

4. Failing to Evaluate Properly: Another common mistake is relying on a single evaluation metric or not properly validating the model. For example, accuracy alone can be misleading, especially in imbalanced datasets. It’s important to use appropriate metrics (e.g., precision, recall, F1-score) and to apply cross-validation techniques to get a comprehensive view of the model’s performance.

5. Neglecting Feature Engineering: Often, data features are not as informative as they could be. Failing to properly engineer new features or select the most relevant ones can result in poor model performance. Effective feature extraction and selection can make a significant difference in the quality of predictions.

Avoiding these common pitfalls is essential for creating robust and reliable models. By understanding these mistakes, developers can take proactive steps to improve their process, resulting in better outcomes and more accurate predictions.

Optimizing Hyperparameters in Python

Improving model performance often involves adjusting various parameters that control how algorithms learn from data. These parameters, known as hyperparameters, can significantly impact the outcome of a model. Tuning these settings optimally requires a systematic approach to find the best values that maximize accuracy or minimize error. The process of hyperparameter optimization plays a critical role in improving model performance and ensuring robust predictions.

Methods for Hyperparameter Optimization:

- Grid Search: One of the most common methods, grid search involves testing a predefined set of hyperparameter values and selecting the best combination. While thorough, this approach can be computationally expensive, especially with large datasets and many parameters.

- Random Search: Instead of testing every possible combination, random search selects random hyperparameter values within a given range. This method is often more efficient than grid search and can provide good results with fewer trials.

- Bayesian Optimization: This method uses a probabilistic model to predict which hyperparameter values will yield the best performance. It often needs fewer trials than grid or random search because it concentrates on promising regions of the hyperparameter space, which matters most when each trial is expensive.

- Genetic Algorithms: This approach uses principles of natural selection to evolve a population of hyperparameter configurations. Over time, the algorithm converges on the best values, making it suitable for complex models.
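The contrast between grid search and random search can be sketched in a few lines. Here `validation_score` is a hypothetical stand-in for "train a model and measure its validation score"; its shape, the parameter names, and the search ranges are all assumptions chosen for illustration. scikit-learn's `GridSearchCV` and `RandomizedSearchCV` wrap the same idea around real estimators.

```python
import itertools
import random

def validation_score(learning_rate, max_depth):
    """Hypothetical score surface that peaks at learning_rate=0.1, max_depth=5."""
    return 1.0 - 10 * (learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 5) ** 2

def grid_search(grid):
    """Try every combination in the grid and keep the best one."""
    best = None
    for lr, depth in itertools.product(grid["learning_rate"], grid["max_depth"]):
        score = validation_score(lr, depth)
        if best is None or score > best[0]:
            best = (score, {"learning_rate": lr, "max_depth": depth})
    return best

def random_search(n_trials, seed=0):
    """Sample configurations at random from the given ranges."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-3, 0)  # log-uniform between 0.001 and 1
        depth = rng.randint(1, 10)     # inclusive on both ends
        score = validation_score(lr, depth)
        if best is None or score > best[0]:
            best = (score, {"learning_rate": lr, "max_depth": depth})
    return best

grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [3, 5, 7]}
grid_best = grid_search(grid)          # 9 evaluations
random_best = random_search(n_trials=9)  # same budget, different coverage
```

With the same budget of nine trials, grid search only ever tests three distinct learning rates, while random search tests up to nine, which is one reason it is often preferred when only a few hyperparameters really matter.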

Important Hyperparameters to Consider:

- Learning Rate: Determines the step size during optimization. A learning rate that is too high may cause the optimizer to overshoot the minimum or diverge, while one that is too low makes convergence slow.

- Batch Size: The number of training samples used in one iteration. A larger batch size can speed up training on parallel hardware, but very large batches may generalize worse unless the learning rate is adjusted.

- Number of Layers and Neurons: In deep learning models, adjusting the number of layers or neurons per layer can affect the model’s ability to learn complex patterns.

- Regularization Parameters: Regularization helps prevent overfitting by penalizing large model coefficients. Tuning the strength of regularization is crucial for achieving a balance between underfitting and overfitting.
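The effect of the learning rate is easy to observe on a one-dimensional problem. The sketch below runs plain gradient descent on the toy loss f(w) = w², whose minimum is at w = 0; the function name, starting point, and step count are assumptions for illustration.

```python
def gradient_descent(learning_rate, steps=50, start=5.0):
    """Minimize f(w) = w**2 with fixed-step gradient descent."""
    w = start
    for _ in range(steps):
        grad = 2 * w               # derivative of w**2
        w -= learning_rate * grad  # step against the gradient
    return w

# Too low, reasonable, and too high learning rates.
results = {lr: gradient_descent(lr) for lr in (0.001, 0.1, 1.1)}
```

After 50 steps the smallest rate has barely moved from the starting point, the middle rate is very close to the minimum, and the largest rate has diverged because each step overshoots by more than it corrects.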

Tips for Efficient Hyperparameter Tuning:

- Start with a smaller range for hyperparameters and progressively explore larger values.

- Use cross-validation to evaluate model performance on different subsets of the data to avoid overfitting.

- Leverage parallel computing to speed up the optimization process, especially when testing multiple combinations of hyperparameters.

Optimizing hyperparameters is an iterative and often time-consuming process, but the results are crucial for maximizing model performance. By applying these techniques, you can refine your models and achieve better results on your tasks.

Feature Engineering for Better Accuracy

The quality of a model’s input data is often the deciding factor in its performance. By transforming raw data into meaningful features, you can improve the model’s ability to identify patterns and make accurate predictions. Feature engineering involves selecting, modifying, or creating new variables that enhance the model’s predictive power. This process is essential for achieving high accuracy, especially in complex tasks.

Common Feature Engineering Techniques:

- Handling Missing Values: Missing data is a common issue in real-world datasets. Techniques such as imputation or removing missing values can be applied to ensure that the model has complete information.

- Encoding Categorical Variables: Categorical features must be converted into numerical values for the model to process them effectively. Methods like one-hot encoding or label encoding are commonly used for this purpose.

- Feature Scaling: Some models are sensitive to the scale of the input features. Normalizing or standardizing data can ensure that features with different ranges do not dominate the learning process.

- Polynomial Features: Creating higher-order terms of numerical features can help capture non-linear relationships within the data. Polynomial features are especially useful for linear models.

- Feature Extraction: This technique involves creating new features from existing ones, such as extracting statistical summaries (mean, median, variance) or domain-specific transformations that may reveal hidden patterns.
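Two of the techniques above, one-hot encoding and polynomial features, can be sketched without any library. The helper names and sample values are assumptions for illustration; scikit-learn's `OneHotEncoder` and `PolynomialFeatures` cover the same ground with more options.

```python
def one_hot_encode(values):
    """Map each category to a 0/1 indicator vector (categories sorted)."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded

def polynomial_features(x, degree=2):
    """Expand a single numeric value into [x, x**2, ..., x**degree]."""
    return [x ** d for d in range(1, degree + 1)]

colors = ["red", "green", "blue", "green"]  # hypothetical categorical column
categories, encoded = one_hot_encode(colors)
expanded = [polynomial_features(x, degree=2) for x in [1.0, 2.0, 3.0]]
```

One-hot encoding avoids implying an order between categories, which label encoding (red = 0, green = 1, and so on) would do. The squared column lets a linear model fit a curved relationship.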

Feature Selection:

While creating new features is important, it is equally crucial to identify and retain only the most relevant features. Irrelevant or redundant features can lead to overfitting and reduce the model’s generalization ability. Feature selection methods such as Recursive Feature Elimination (RFE) or tree-based methods like Random Forest can help in selecting the most impactful features.

Feature Importance Table:

| Feature Selection Method | Description |
|---|---|
| Recursive Feature Elimination (RFE) | A method that repeatedly fits a model and removes the least important features, ranked by the model’s coefficients or feature importances. |
| Random Forest Feature Importance | Uses decision trees to estimate the importance of each feature based on how it improves split quality. |
| Principal Component Analysis (PCA) | A dimensionality reduction technique that transforms features into a smaller set of uncorrelated components. |

By investing time in effective feature engineering, you can significantly enhance the accuracy of your models. This iterative process involves understanding your data, experimenting with different techniques, and refining the features to achieve the best possible performance.

Handling Imbalanced Datasets in Python

In many real-world datasets, the distribution of classes is often skewed, with some classes being significantly underrepresented. This imbalance can lead to biased models that are more likely to predict the majority class, ignoring the minority class. Addressing this issue is crucial for building accurate and fair models. By implementing appropriate techniques, you can ensure that your model learns to recognize patterns in both the majority and minority classes effectively.

Techniques for Handling Imbalanced Data:

- Resampling: This involves adjusting the size of the classes to make them more balanced. Two common approaches are oversampling the minority class and undersampling the majority class. Oversampling replicates instances of the minority class, while undersampling reduces the number of instances in the majority class.

- SMOTE (Synthetic Minority Over-sampling Technique): Instead of simply replicating minority class samples, SMOTE generates synthetic data points by interpolating between existing minority class instances. This enriches the minority region of the feature space and carries less risk of overfitting than duplicating samples exactly.

- Class Weights Adjustment: Many algorithms allow for adjusting the weight of each class during training. By assigning a higher weight to the minority class, the model will give more importance to correctly predicting those instances, leading to better performance on the underrepresented class.

- Ensemble Methods: Techniques such as Bagging and Boosting can improve model performance in imbalanced datasets. Methods like Random Forest and XGBoost often include built-in mechanisms to handle imbalance, such as adjusting class weights or resampling during training.

- Anomaly Detection: When the minority class is extremely rare, it can be treated as an anomaly detection problem. This technique focuses on identifying outliers or rare events that deviate significantly from the majority class.
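Two of the ideas above can be illustrated in plain Python: inverse-frequency class weights and SMOTE-style interpolation. This is a deliberately simplified sketch. The function names are assumptions, and the interpolation always uses the single nearest minority neighbour, whereas SMOTE as usually implemented (for example in the imbalanced-learn package) picks randomly among several nearest neighbours.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency: n / (k * count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}

def interpolate_minority(points, n_new, seed=0):
    """Create synthetic points between a minority sample and its nearest neighbour."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(points)
        others = [p for p in points if p is not base]
        neighbour = min(
            others,
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )
        t = rng.random()  # position along the segment base -> neighbour
        synthetic.append(tuple(a + t * (b - a) for a, b in zip(base, neighbour)))
    return synthetic

labels = [0] * 90 + [1] * 10  # hypothetical 90/10 class split
weights = balanced_class_weights(labels)

minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = interpolate_minority(minority, n_new=3)
```

Resampling should be applied to the training data only; the validation and test sets should keep the original class distribution so that the reported metrics reflect real conditions.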

Choosing the right technique depends on the nature of the dataset and the problem at hand. It is essential to evaluate the model’s performance using appropriate metrics such as precision, recall, and the F1-score to ensure that both classes are being predicted effectively.

By properly addressing class imbalance, you can create more robust models that are better suited for real-world applications, where balanced decision-making is often crucial.

Understanding Overfitting and Underfitting

When developing predictive models, it is essential to find the right balance between capturing patterns in the data and maintaining the model’s ability to generalize to unseen data. Two common issues that arise in this process are overfitting and underfitting. Both of these problems can lead to poor model performance, but they are caused by different factors. Understanding these issues and knowing how to address them is key to building accurate and reliable models.

Overfitting

Overfitting occurs when a model learns the noise or irrelevant details in the training data to the point that it performs well on the training set but poorly on new, unseen data. Essentially, the model becomes too complex, capturing patterns that are not representative of the overall population. As a result, it fails to generalize well.

- Signs of Overfitting:
  - High performance on training data but poor performance on test data.
  - Model complexity is excessively high (e.g., too many parameters or features).
  - Model is overly sensitive to small variations in the training data.

- How to Address Overfitting:
  - Simplify the model by reducing the number of features or parameters.
  - Use techniques like cross-validation to assess model performance more reliably.
  - Regularize the model by adding penalties for overly complex solutions (e.g., L1, L2 regularization).
  - Increase the size of the training dataset to help the model better generalize.

Underfitting

Underfitting occurs when a model is too simple to capture the underlying patterns in the data. This typically happens when the model is not complex enough or when insufficient training has been done. As a result, the model fails to learn the relationships between the features and the target variable, leading to poor performance both on the training data and test data.

- Signs of Underfitting:
  - Poor performance on both training and test data.
  - Model is too simplistic (e.g., using linear models for highly complex relationships).
  - Features are not being fully utilized or are irrelevant to the problem at hand.

- How to Address Underfitting:
  - Increase model complexity by adding more features or using more sophisticated algorithms.
  - Train for longer when the model has not yet converged; adding more data alone rarely fixes a model that is too simple.
  - Reduce regularization, as overly strong regularization can cause the model to be too simple.

By understanding the nuances of overfitting and underfitting, you can create more balanced and effective models. The key is to strike the right balance between model complexity and generalization, ensuring that your model performs well not just on the training data but also on new, unseen data.
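The link between regularization strength and model simplicity can be shown with a one-feature example. The sketch below fits y ≈ w·x without an intercept using L2 (ridge) regularization, for which the closed-form solution is w = Σxy / (Σx² + λ). The data points and the λ values are assumptions chosen for illustration.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form 1-D ridge solution: minimizes sum((y - w*x)**2) + lam * w**2."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + lam)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # hypothetical data, roughly y = 2x

# No penalty, a moderate penalty, and a very strong penalty.
weights = [ridge_weight(xs, ys, lam) for lam in (0.0, 10.0, 1000.0)]
```

With no penalty the weight is close to the true slope of 2. As λ grows the weight shrinks toward zero, and with a very large λ the model predicts almost nothing, which is the underfitting caused by overly strong regularization.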

Implementing Neural Networks in Python

Neural networks are models loosely inspired by how the brain processes information: layers of connected units that learn from complex data. This section explores how to develop and implement these networks using popular tools and frameworks. These models have applications in various fields, from image recognition to natural language processing. By following the right steps, you can build a neural network that efficiently handles data and provides valuable insights.

Building the Network

The first step in constructing a network is defining its architecture. This involves selecting the number of layers, the number of neurons in each layer, and how they are interconnected. Several frameworks are available to simplify this process, with TensorFlow and Keras being some of the most widely used. Here is a general approach to building a neural network:

- Choose a framework: Select a tool like TensorFlow, Keras, or PyTorch for building the network.

- Define the architecture: Decide on the number of layers and the types of layers (e.g., fully connected, convolutional).

- Initialize weights: Initialize the weights and biases of each neuron with appropriate values.

- Activation functions: Apply activation functions such as ReLU or Sigmoid to introduce non-linearity into the network.

Training the Model

Once the architecture is established, the next crucial step is training the model on your data. During training, the model adjusts its internal parameters to minimize the error or loss, improving its ability to make predictions. The following steps outline the training process:

- Feed data: Provide the network with labeled data, separating it into training and validation sets.

- Optimization algorithm: Choose an optimization algorithm like stochastic gradient descent (SGD) or Adam to adjust weights and minimize loss.

- Loss function: Select a loss function like mean squared error (MSE) or cross-entropy to measure prediction error.

- Backpropagation: Use the backpropagation technique to compute gradients of the loss in a backward pass, after the forward pass has produced predictions, and update the weights based on those gradients.

By repeating this process over multiple iterations, the network becomes more accurate in its predictions, eventually reaching a point of convergence where the performance is stable.
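The training loop described above can be seen end to end in the smallest possible network: a single sigmoid neuron trained with stochastic gradient descent and a cross-entropy loss. This is a from-scratch sketch for illustration, not how TensorFlow, Keras, or PyTorch are used in practice; the function names, the logical-OR data, and the hyperparameter values are assumptions.

```python
import math
import random

def sigmoid(z):
    """Activation function that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, learning_rate=0.5, epochs=500, seed=0):
    """Train one sigmoid neuron with two inputs using SGD."""
    rng = random.Random(seed)
    # Initialize weights with small random values and the bias with zero.
    w = [rng.uniform(-0.1, 0.1) for _ in range(2)]
    b = 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            # Forward pass: compute the predicted probability.
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Backward pass: for sigmoid + cross-entropy the gradient of the
            # loss with respect to the pre-activation is simply (p - y).
            dz = p - y
            # Gradient step on each parameter (chain rule: dz * input).
            w[0] -= learning_rate * dz * x[0]
            w[1] -= learning_rate * dz * x[1]
            b -= learning_rate * dz
    return w, b

def predict(w, b, x):
    """Threshold the neuron's output at 0.5."""
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0

# Logical OR: linearly separable, so a single neuron can learn it.
or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(or_data)
predictions = [predict(w, b, x) for x, _ in or_data]
```

Deep learning frameworks automate exactly the part done by hand here: they record the forward pass and derive the backward-pass gradients for every layer automatically.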

Evaluating the Model

After training, it is essential to evaluate the model’s performance using a separate test set. This helps in understanding how well the network generalizes to new, unseen data. Common evaluation metrics include:

- Accuracy: The percentage of correct predictions out of the total predictions made.

- Precision and Recall: Measures used for imbalanced datasets to evaluate the model’s performance on positive classes.

- F1 Score: The harmonic mean of precision and recall, offering a single metric for model performance.

By thoroughly testing the model and analyzing its performance, you can make necessary adjustments to enhance its accuracy and efficiency.

Using Cross-Validation for Model Evaluation

To ensure a model generalizes well to new, unseen data, it is crucial to evaluate its performance thoroughly. Cross-validation is a technique used to assess how well a model will perform on an independent dataset by dividing the available data into multiple subsets. This method provides a more reliable estimate of the model’s accuracy and makes it easier to spot overfitting or underfitting that a single train/test split might hide.

How Cross-Validation Works

Cross-validation involves splitting the dataset into multiple parts, or folds, where each fold serves as a testing set while the remaining parts are used for training. This process is repeated for each fold, ensuring that every data point is used for both training and testing. The average performance across all folds is then calculated to provide a final evaluation metric.

- Split the data: Divide the dataset into K equal-sized parts, known as folds.

- Training and Testing: For each fold, train the model on K-1 folds and test it on the remaining fold.

- Repeat: This process is repeated for all folds, ensuring that each part of the data is used for both training and testing.

- Calculate performance: The results from all folds are averaged to obtain a final performance metric, such as accuracy or F1 score.
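The four steps above can be sketched as a small index generator plus an averaging loop. The names `k_fold_indices`, `cross_validate`, `fit_mean`, and `neg_mse` are assumptions for this example, and the "model" is just the mean of the training targets so the code stays self-contained. scikit-learn's `KFold` and `cross_val_score` provide the same workflow for real estimators.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    # The first n_samples % k folds get one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_samples) if i < start or i >= stop]
        yield train_idx, test_idx
        start = stop

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and average."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(
            score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx])
        )
    return sum(scores) / len(scores)

def fit_mean(train_x, train_y):
    """Toy 'model': always predict the mean training target."""
    return sum(train_y) / len(train_y)

def neg_mse(model, test_x, test_y):
    """Negative mean squared error, so that higher is better."""
    return -sum((y - model) ** 2 for y in test_y) / len(test_y)

xs = list(range(10))
ys = [2.0 * x for x in xs]
folds = list(k_fold_indices(len(xs), k=3))
mean_score = cross_validate(xs, ys, k=3, fit=fit_mean, score=neg_mse)
```

This sketch does not shuffle the data. If the rows are ordered (by time or by class, for instance), shuffling or a stratified split is usually needed before the folds are meaningful.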

Types of Cross-Validation

There are different variations of cross-validation, each suited to specific types of datasets and tasks:

- K-Fold Cross-Validation: The most common approach, where the data is split into K folds, and the process is repeated K times.

- Stratified K-Fold: Similar to K-fold but ensures that each fold has a proportionate representation of each class, ideal for imbalanced datasets.

- Leave-One-Out Cross-Validation (LOOCV): A special case where K equals the number of data points, and each data point is used once as the test set.

- Group K-Fold: Used when data points are grouped (e.g., by category or subject), ensuring that all data points in a group are either in the training or testing set, not split between them.
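To show what "proportionate representation" means for the stratified variant, here is a simplified sketch that deals the samples of each class round-robin across folds. The function name and the 8-to-4 label split are assumptions; scikit-learn's `StratifiedKFold` is the tested implementation and handles shuffling and uneven class sizes more carefully.

```python
from collections import defaultdict

def stratified_fold_assignment(labels, k):
    """Return a fold id per sample, spreading each class evenly over k folds."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [None] * len(labels)
    for indices in by_class.values():
        # Deal the samples of this class out like cards: fold 0, 1, ..., k-1, 0, ...
        for position, idx in enumerate(indices):
            folds[idx] = position % k
    return folds

labels = [0] * 8 + [1] * 4  # hypothetical imbalanced labels
fold_ids = stratified_fold_assignment(labels, k=4)

# Count how many samples of each class ended up in each fold.
per_fold = [
    (
        sum(1 for f, y in zip(fold_ids, labels) if f == fold and y == 0),
        sum(1 for f, y in zip(fold_ids, labels) if f == fold and y == 1),
    )
    for fold in range(4)
]
```

Every fold here keeps the original 2:1 class ratio, so no fold is left without minority samples, which can happen with a plain K-fold split on imbalanced data.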

Benefits of Cross-Validation

Using cross-validation provides several advantages for model evaluation:

- Improved accuracy estimation: By averaging performance across multiple folds, cross-validation reduces the risk of biased results from a single training/test split.

- Better model selection: Cross-validation helps in comparing different models and selecting the best-performing one.

- Helps in detecting overfitting: A model that scores well on its training folds but poorly on the held-out folds is relying too heavily on a specific subset of the data. Cross-validation exposes this gap so the model can be made more robust and generalizable.

By applying cross-validation, you gain a better understanding of how well your model will perform when faced with real-world data. It also provides valuable insights into potential weaknesses, allowing for improvements before deployment.

Working with Unsupervised Learning Techniques

Unsupervised methods are used to uncover hidden patterns in data without predefined labels or outputs. These techniques allow for the discovery of inherent structures, clusters, or relationships within the dataset, enabling valuable insights from raw, unclassified data. They are often employed when there is little or no labeled data available, and the goal is to explore the underlying data distribution.

Common Approaches in Unsupervised Techniques

Several key approaches are commonly used in unsupervised tasks, each designed to address specific types of problems. The most popular methods include:

- Clustering: This approach groups similar data points together based on certain characteristics. The most widely used clustering techniques include K-means, DBSCAN, and hierarchical clustering.

- Dimensionality Reduction: Reducing the number of variables while maintaining the essential structure of the data. Techniques like Principal Component Analysis (PCA) and t-SNE are frequently employed.

- Anomaly Detection: Identifying rare or unusual patterns in the data that do not conform to the expected behavior. This is useful for fraud detection or identifying outliers in datasets.
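As an illustration of the clustering approach, the following is a bare-bones sketch of K-means (Lloyd's algorithm) on two-dimensional points. The function name `k_means`, its parameters, and the sample points are assumptions made for this example; it requires Python 3.8 or later for `math.dist`.

```python
import math
import random


def k_means(points, k, iterations=20, seed=0):
    """Plain k-means on a list of (x, y) tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k of the data points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda c: math.dist(point, centroids[c]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for centroid, cluster in zip(centroids, clusters):
            if cluster:
                xs, ys = zip(*cluster)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroid)  # leave an empty cluster in place
        if new_centroids == centroids:
            break  # assignments no longer change
        centroids = new_centroids
    return centroids, clusters


# Hypothetical data: two well-separated groups of three points each.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, clusters = k_means(points, k=2)
print("centroids:", centroids)
print("cluster sizes:", [len(c) for c in clusters])
```

Note that no labels are passed in: the grouping comes entirely from the distances between points. K-means is sensitive to its starting centroids on harder data, so library implementations typically run several initializations and keep the best result.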

Applications of Unsupervised Techniques

These methods are used in various fields where labeled data is sparse or unavailable. Some key areas of application include:

- Customer segmentation: Grouping customers based on purchasing behavior or demographics to tailor marketing efforts.

- Image compression: Reducing the complexity of image data without losing essential visual information.

- Natural language processing: Grouping similar words, phrases, or documents in text data for tasks like topic modeling, or as a preprocessing step for sentiment analysis.

- Anomaly detection in sensor data: Identifying faulty sensor readings or equipment failures in industrial settings.
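The sensor example lends itself to a short sketch. The version below flags readings that sit far from the median, measured in units of the median absolute deviation (MAD). The function name `find_anomalies`, the readings, and the threshold of 3.5 are assumptions; the 0.6745 scaling and the 3.5 cutoff are a common rule of thumb for this "modified z-score", not a universal setting.

```python
from statistics import median


def find_anomalies(readings, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds threshold."""
    center = median(readings)
    mad = median(abs(x - center) for x in readings)
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    anomalies = []
    for index, value in enumerate(readings):
        score = 0.6745 * abs(value - center) / mad
        if score > threshold:
            anomalies.append((index, value))
    return anomalies


# Hypothetical temperature readings with one faulty spike.
readings = [20.1, 19.8, 20.3, 20.0, 19.9, 35.7, 20.2, 20.1, 19.7, 20.0]
print(find_anomalies(readings))
```

The median and MAD are used instead of the mean and standard deviation because a single extreme reading inflates the standard deviation and can hide itself in a small sample.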

By leveraging these unsupervised techniques, valuable patterns and structures can be extracted from datasets, which might otherwise remain hidden in large, unstructured data collections.

Testing and Debugging Python Code for ML

Ensuring the correctness and efficiency of code is a critical part of developing models for data analysis. Proper testing and debugging allow developers to identify and fix errors, ensuring that algorithms run smoothly and produce reliable results. This process is essential for building robust solutions and improving the overall performance of data-driven projects.

Key Techniques for Testing Code

Testing involves verifying that the code behaves as expected under various conditions. Common techniques include:

- Unit Testing: Writing small tests to verify individual functions or components of the code. Python’s built-in unittest framework helps automate this process.

- Integration Testing: Testing how different parts of the code interact with each other, ensuring that components work well when combined.

- Regression Testing: Verifying that new changes to the code do not introduce unexpected errors or negatively affect existing functionality.

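- Cross-validation: Splitting the data into training and testing sets several times to check that the model performs well across different subsets of data. Strictly speaking, this validates the model rather than the code, but it often surfaces bugs such as data leakage.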
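A unit test for a typical preprocessing step might look like the sketch below. The function `min_max_scale` and its behavior on constant input are hypothetical choices made for this example; only the `unittest` calls are standard-library API.

```python
import unittest


def min_max_scale(values):
    """Rescale numbers to [0, 1]; a constant list maps to all zeros."""
    low, high = min(values), max(values)  # min() raises ValueError on []
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


class MinMaxScaleTests(unittest.TestCase):
    def test_endpoints_map_to_zero_and_one(self):
        self.assertEqual(min_max_scale([10, 15, 20]), [0.0, 0.5, 1.0])

    def test_constant_input_does_not_divide_by_zero(self):
        self.assertEqual(min_max_scale([3, 3, 3]), [0.0, 0.0, 0.0])

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            min_max_scale([])


# Load and run the test case explicitly so the script keeps running afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(MinMaxScaleTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all passed:", result.wasSuccessful())
```

In a project, tests like these normally live in their own file and are run with `python -m unittest`. Edge cases such as empty or constant input are worth testing first, since they are where preprocessing code tends to fail silently.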

Effective Debugging Strategies

Debugging helps locate and fix issues that prevent the code from functioning as intended. Some common approaches include:

- Using Print Statements: Inserting print statements throughout the code to monitor the values of variables and trace execution flow.

- Using a Debugger: Tools like pdb allow developers to pause execution, inspect variables, and step through the code line by line to identify problems.

- Isolating the Problem: Narrowing down the issue by isolating sections of the code, commenting out parts, or using smaller test cases to simplify debugging.

- Checking for Common Errors: Ensuring that common issues such as index out-of-bounds, type mismatches, and data input errors are handled appropriately.
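Many of the common errors listed above can be caught before training starts. The following sketch collects readable problem descriptions instead of letting the model fail later with an obscure traceback. The function name `find_data_problems` and the sample data are hypothetical.

```python
import math


def find_data_problems(features, labels):
    """Return a list of human-readable problems found in the dataset."""
    problems = []
    if len(features) != len(labels):
        problems.append(f"{len(features)} feature rows but {len(labels)} labels")
    width = len(features[0]) if features else 0
    for row_number, row in enumerate(features):
        if len(row) != width:
            problems.append(f"row {row_number}: {len(row)} values, expected {width}")
        for value in row:
            # bool is a subclass of int, so it is rejected explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                problems.append(f"row {row_number}: non-numeric value {value!r}")
            elif math.isnan(value):
                problems.append(f"row {row_number}: NaN value")
    return problems


# Hypothetical data with a missing label, a NaN, and a string cell.
features = [[1.0, 2.0], [3.0, float("nan")], [5.0, "7"]]
labels = [0, 1]
for problem in find_data_problems(features, labels):
    print(problem)
```

When a check like this points to a specific row, placing the built-in `breakpoint()` call just before the failing step opens `pdb` at that point so the offending values can be inspected interactively.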

By employing rigorous testing and debugging techniques, developers can ensure that their models run effectively and produce accurate results, leading to more successful projects in data analysis and algorithm development.

Applying ML to Real-World Problems

In today’s world, the application of advanced computational techniques to solve complex challenges is becoming increasingly common. By analyzing vast amounts of data, it’s possible to gain insights that were once unattainable. These approaches can be applied across industries, from healthcare to finance, to optimize processes, improve decision-making, and create smarter systems that adapt to real-world environments.

Real-world scenarios often involve noisy, incomplete, and unstructured data. Tackling such problems requires a deep understanding of the underlying data, as well as the ability to select appropriate methods that best address the specific challenge. In these cases, it’s essential to adapt models to work effectively within the constraints of real-world situations while balancing computational efficiency and accuracy.

Healthcare and Medical Applications

One of the most impactful uses of advanced algorithms is in healthcare, where data from medical records, diagnostic images, and patient monitoring systems is analyzed to detect diseases, predict outcomes, and suggest treatments. These techniques have shown promise in areas like:

- Predictive Diagnostics: Identifying early signs of diseases such as cancer, diabetes, or heart disease based on patterns found in patient data.

- Medical Imaging: Using algorithms to process and analyze X-rays, MRIs, and CT scans, aiding doctors in making more accurate diagnoses.

- Personalized Medicine: Tailoring treatments to individual patients by analyzing their genetic makeup and health history.

Finance and Business Insights

In finance, computational models can analyze trends, detect fraud, and forecast market behavior. Businesses use these methods to gain a competitive edge by improving customer experience, optimizing supply chains, and making data-driven decisions. Some examples include:

- Fraud Detection: Identifying unusual spending patterns or transactions that may indicate fraudulent activities.

- Customer Segmentation: Grouping customers based on purchasing behavior, allowing for targeted marketing strategies and personalized offers.

- Stock Market Prediction: Analyzing past data to make informed predictions about future market movements.

Challenges and Considerations

Despite the promise, there are several challenges to applying these methods in the real world. Issues such as data privacy concerns, the need for large volumes of high-quality data, and the interpretability of models must be considered. Moreover, not all problems can be solved by computational methods alone; human expertise is often required to make the final decisions based on model outputs.

When deployed correctly, advanced computational methods can bring about transformative change, driving efficiencies and innovation in a wide range of industries. However, their successful application requires careful planning, iterative development, and ongoing refinement based on real-world feedback.

Final Exam Preparation Tips for Success

Preparing effectively for an important assessment is crucial to achieving success. A well-organized approach to studying not only helps in retaining knowledge but also boosts confidence during the actual test. Adopting a structured strategy, practicing consistently, and reviewing key concepts are some of the essential steps to ensure readiness for any challenging evaluation.

By focusing on mastering the core principles, organizing study materials, and simulating test conditions, you can significantly improve your performance. Additionally, staying calm, managing time efficiently, and understanding the format of the assessment are vital for performing under pressure. Below are some valuable tips to guide you through your preparation.

Key Tips for Effective Preparation

| Tip | Description |
|---|---|
| Start Early | Begin studying well in advance to avoid cramming at the last minute. This allows ample time to absorb and understand complex topics. |
| Organize Your Study Schedule | Create a daily timetable that breaks down tasks into manageable chunks. Prioritize topics based on difficulty and importance. |
| Practice Regularly | Reinforce your understanding by solving practice problems, taking mock tests, or reviewing previous quizzes. This helps familiarize you with the format. |
| Review Key Concepts | Focus on fundamental concepts and key formulas. Ensure you have a solid grasp of the basics before moving to advanced topics. |
| Stay Calm and Confident | Keep a positive mindset and manage stress. A calm, focused approach can enhance your ability to recall information accurately. |

Effective Study Resources

In addition to textbooks and class notes, various supplementary materials can aid in the preparation process. These resources can provide different perspectives on the material, allowing for deeper understanding.

- Online Courses: Many platforms offer free and paid courses that explain complex topics in detail.

- Study Groups: Collaborating with peers can help clarify doubts and reinforce learning through discussions and problem-solving.

- Flashcards: A useful tool for memorizing key terms and concepts, especially when preparing for assessments that require quick recall.

By following these strategies and maintaining consistency in your approach, you will increase your chances of achieving success on the test. A well-prepared candidate is not only knowledgeable but also confident and composed under examination conditions.