Database Management Exam Questions and Answers

To succeed in the field of information systems, understanding core concepts is essential. Comprehensive knowledge of how data is structured, processed, and secured will greatly enhance your ability to tackle complex topics. With the right approach, you can prepare effectively for any related challenge.

Focusing on key principles like system architecture, query optimization, and ensuring data integrity allows for a solid foundation. Being familiar with common tools and techniques used in the industry will give you an edge in applying theoretical knowledge to practical scenarios.

Whether you are preparing for an assessment or striving to strengthen your understanding, revisiting essential topics and practicing problem-solving will set you up for success. Equip yourself with the necessary skills to confidently handle any challenge that comes your way in this field.

Success in any assessment of this field requires a deep understanding of the essential principles involved in organizing, securing, and manipulating large sets of information. Knowing how to approach complex scenarios and tackle common problems is key. This section will guide you through critical areas that are often tested and provide insight into how to effectively prepare.

Core Concepts to Master

Focusing on fundamental concepts is crucial. Topics such as data integrity, system architecture, and query optimization regularly appear in assessments. By understanding how these elements work together, you can approach problem-solving with greater confidence. Make sure to practice the core skills related to these concepts to reinforce your knowledge.

Practical Skills for Problem Solving

In addition to theory, practical experience is invaluable. Familiarity with key tools and techniques used in this field can make a significant difference. Exercises that simulate real-world scenarios will help you become adept at solving complex tasks efficiently. Be sure to review typical problems and their solutions to get a clear picture of what to expect.

Key Concepts in Database Management

To excel in the field of information systems, it’s crucial to have a solid grasp of the fundamental principles that govern how data is structured, stored, and accessed. Mastering these concepts will not only improve problem-solving skills but also lay the foundation for understanding more advanced topics. This section highlights some of the most essential ideas that are often tested in assessments and real-world applications.

Core Principles to Understand

The following concepts are vital for anyone seeking to deepen their knowledge and expertise in the field:

- Data Integrity – Ensuring accuracy and consistency of information across systems.

- Normalization – Organizing data to minimize redundancy and improve efficiency.

- Relational Models – Structures that define how data is linked and accessed in a system.

- Security Protocols – Measures to protect sensitive information from unauthorized access.

Key Techniques for Effective Handling

Building practical knowledge in the following areas will further enhance understanding:

- Query Optimization – Techniques to improve the speed and efficiency of data retrieval.

- Backup and Recovery – Processes to safeguard information and restore it in case of loss.

- Indexing – Creating efficient ways to search and retrieve data quickly.

- Transaction Management – Handling changes to ensure system consistency and prevent errors.

Types of Databases and Their Uses

Different systems are designed to store and process data in unique ways, depending on the needs of a given application. Understanding the various types of structures available is essential for selecting the right solution for a particular task. This section provides an overview of the main system types and how they are used in practice.

| Type | Description | Common Uses |
|---|---|---|
| Relational | Stores data in tables with predefined relationships between them. | Financial systems, customer records, inventory tracking |
| NoSQL | Non-relational, designed for large-scale, distributed data storage. | Social media platforms, real-time analytics, big data |
| Graph | Optimized for data with complex relationships, represented as nodes and edges. | Social networks, recommendation engines, fraud detection |
| Object-Oriented | Data is represented as objects, similar to object-oriented programming. | Engineering applications, multimedia systems, CAD/CAM |
| Hierarchical | Organizes data in a tree-like structure with parent-child relationships. | XML data storage, telecommunications, directory services |

Understanding Database Architecture Basics

Grasping the foundational structure of any information system is crucial for effective utilization and troubleshooting. The organization of data, how it is accessed, and the processes that ensure consistency and efficiency are core components of these systems. This section covers the fundamental architecture elements that make up a robust system, enabling efficient data storage, retrieval, and management.

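At its core, a well-designed structure includes several layers that work together. These layers define how information is stored, how users interact with it, and how the system handles requests and updates. One common way to describe them is the three-level ANSI/SPARC architecture: the internal level (physical storage), the conceptual level (the logical schema shared by all users), and the external level (the views that individual users or applications see). Understanding these building blocks makes it easier to navigate different systems and to troubleshoot them.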

Common Database Management Tools

Various tools are available to help manage and interact with information systems, each designed to simplify tasks like data organization, retrieval, and analysis. These tools enable users to handle complex tasks efficiently, whether it’s querying information, optimizing performance, or maintaining security. Understanding the features and functionalities of these tools is crucial for anyone working in this field.

Essential Tools for Everyday Use

Here are some widely used tools that are integral to handling data effectively:

| Tool | Description | Primary Use |
|---|---|---|
| MySQL | An open-source relational database management system known for its speed and reliability. | Web applications, e-commerce platforms |
| Microsoft SQL Server | A comprehensive system offering advanced data storage and processing capabilities. | Enterprise applications, business intelligence |
| Oracle | A powerful platform designed for large-scale and complex data operations. | Financial services, large-scale data analysis |
| MongoDB | A NoSQL platform known for its flexibility and scalability with unstructured data. | Big data, real-time applications |
| PostgreSQL | An advanced open-source system known for its extensibility and robustness. | Geospatial applications, data analytics |

Tools for Optimizing Performance

Performance optimization is crucial for efficient operations. Below are tools that enhance system efficiency:

| Tool | Description | Primary Use |
|---|---|---|
| Navicat | A comprehensive management and design tool for various database systems. | Query optimization, data modeling |
| Toad | A powerful tool for SQL query development and performance tuning. | SQL optimization, troubleshooting |
| phpMyAdmin | A web-based tool for managing MySQL databases with an easy-to-use interface. | Database administration, query execution |
| DbVisualizer | A universal database tool that supports various platforms and helps with visual data exploration. | Query building, performance monitoring |

Important SQL Commands for Exams

Mastering the core commands used to interact with information systems is essential for anyone looking to demonstrate their knowledge in this field. These commands form the foundation for querying, updating, and managing large volumes of data. In this section, we will cover the most frequently used commands that are crucial for working with structured systems, helping you prepare for both practical tasks and assessments.

Familiarity with four commands is critical: SELECT reads rows, INSERT adds new rows, UPDATE changes existing rows, and DELETE removes rows. Understanding how to filter results with WHERE, sort them with ORDER BY, and otherwise manipulate data will help not only in assessments but also in real-world applications.
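The four core commands can be tried end to end with a small script. This is a minimal sketch, assuming SQLite through Python's built-in `sqlite3` module; the `students` table and its rows are made up for illustration, and other database systems may differ in minor syntax.

```python
import sqlite3

# Hypothetical in-memory table used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, grade INTEGER)")

# INSERT adds rows; "?" placeholders keep values separate from the SQL text.
conn.executemany(
    "INSERT INTO students (name, grade) VALUES (?, ?)",
    [("Ana", 82), ("Ben", 67), ("Chloe", 91)],
)

# UPDATE changes existing rows that match the WHERE clause.
conn.execute("UPDATE students SET grade = ? WHERE name = ?", (70, "Ben"))

# DELETE removes rows that match the WHERE clause.
conn.execute("DELETE FROM students WHERE name = ?", ("Ana",))

# SELECT reads rows; WHERE filters and ORDER BY sorts the result.
rows = conn.execute(
    "SELECT name, grade FROM students WHERE grade >= ? ORDER BY grade DESC",
    (70,),
).fetchall()
print(rows)  # [('Chloe', 91), ('Ben', 70)]
```

Leaving out the WHERE clause on UPDATE or DELETE affects every row in the table, which is a common source of exam trick questions and real-world accidents alike.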

Normalization and Denormalization Explained

Organizing data effectively is key to ensuring efficiency, consistency, and flexibility in any system. Two fundamental techniques used to handle the arrangement of information are normalization and denormalization. These processes impact how data is stored, accessed, and updated, affecting performance and scalability. In this section, we’ll explain both techniques and their respective advantages and drawbacks.

What is Normalization?

Normalization is the process of structuring information in a way that reduces redundancy and dependency. By breaking down large sets of information into smaller, logically organized tables, this approach helps ensure that data is stored efficiently and that each piece of information is represented only once. This minimizes anomalies during updates and makes it easier to maintain data integrity.

What is Denormalization?

Denormalization, on the other hand, involves combining tables or adding redundancy to speed up query performance in certain situations. While this can lead to increased storage requirements, it can also improve read performance by reducing the need for complex joins. Denormalization is often used when quick retrieval of data is more critical than avoiding redundancy.
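The trade-off between the two approaches can be seen in a small example. The sketch below assumes SQLite through Python's `sqlite3` module, and the `customers`, `orders`, and `orders_flat` tables are hypothetical. It stores the same data both ways, then compares what a read and an update look like in each design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: each customer's city is stored exactly once.
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        total REAL
    );
    INSERT INTO customers VALUES (1, 'Ana', 'Lisbon');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0);

    -- Denormalized: the city is copied onto every order row.
    CREATE TABLE orders_flat (id INTEGER PRIMARY KEY, customer_name TEXT, city TEXT, total REAL);
    INSERT INTO orders_flat VALUES (10, 'Ana', 'Lisbon', 25.0), (11, 'Ana', 'Lisbon', 40.0);
""")

# The normalized read needs a join...
joined = conn.execute(
    "SELECT o.id, c.city FROM orders o JOIN customers c ON c.id = o.customer_id ORDER BY o.id"
).fetchall()
# ...while the denormalized read does not.
flat = conn.execute("SELECT id, city FROM orders_flat ORDER BY id").fetchall()

# Changing the city touches one row in the normalized design and two in the flat one.
n_normalized = conn.execute("UPDATE customers SET city = 'Porto' WHERE id = 1").rowcount
n_flat = conn.execute(
    "UPDATE orders_flat SET city = 'Porto' WHERE customer_name = 'Ana'"
).rowcount
print(joined == flat, n_normalized, n_flat)  # True 1 2
```

If the update to the flat table missed one of the copies, the two order rows would disagree about the customer's city, which is the kind of update anomaly that normalization is meant to prevent.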

Database Security: Key Questions

Ensuring the safety of stored information is critical for any organization. The protection of sensitive data requires a multi-layered approach that addresses various vulnerabilities. In this section, we explore key aspects of securing information, focusing on the most important considerations that need to be addressed for safeguarding data from unauthorized access and potential breaches.

Key Areas of Concern

When considering the security of a system, several critical aspects need to be evaluated:

- Authentication – How can users be verified before gaining access?

- Authorization – What level of access should be granted to different users?

- Encryption – How is sensitive data protected from unauthorized visibility?

- Auditing – How can activities be tracked and monitored for potential threats?

- Backup Security – What steps are in place to protect backup data from tampering or theft?

Common Security Practices

Here are some essential techniques used to ensure the integrity and confidentiality of sensitive information:

- Role-based Access Control (RBAC) – Restricting access based on user roles to minimize unnecessary exposure.

- Data Masking – Concealing sensitive information while maintaining usability in non-production environments.

- Two-factor Authentication (2FA) – Adding an extra layer of security by requiring two forms of verification for access.

- Regular Security Audits – Conducting periodic reviews to detect vulnerabilities and ensure compliance with best practices.
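Two of these practices, role-based access control and data masking, are simple enough to illustrate in a few lines. This is only a sketch of the ideas: the role names, permission sets, and masking rule below are assumptions made for the example, and real systems normally rely on the database's own GRANT mechanism and dedicated masking tools.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "grant"},
}

def is_allowed(role, action):
    """RBAC check: an action is allowed only if the role explicitly includes it."""
    return action in ROLE_PERMISSIONS.get(role, set())

def mask_email(email):
    """Data masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * (len(local) - 1) + "@" + domain

print(is_allowed("analyst", "write"))   # False
print(is_allowed("editor", "write"))    # True
print(mask_email("ana@example.com"))    # a**@example.com
```

Unknown roles fall back to an empty permission set, so access is denied by default rather than granted by accident.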

Data Integrity Rules You Should Know

Ensuring that information is accurate, consistent, and reliable is essential for any system that handles data. Various rules govern how information is stored, updated, and maintained to avoid errors, inconsistencies, and anomalies. Understanding these rules is critical for designing and implementing systems that preserve the quality and validity of data throughout its lifecycle.

Key Principles of Data Integrity

Here are some fundamental rules that ensure data remains trustworthy and free of corruption:

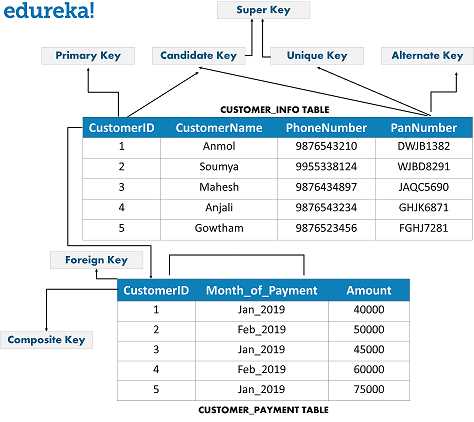

- Entity Integrity – Each record should be uniquely identifiable, typically through a primary key.

- Referential Integrity – Relationships between tables should be maintained, ensuring that foreign keys correctly link to primary keys.

- Domain Integrity – The values in each column must be restricted to a predefined set of valid values or a specific data type.

- User-Defined Integrity – Custom rules specific to the application’s requirements should be defined and enforced to maintain data quality.
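Most of these rules can be declared directly in the table definition so that the database itself rejects bad rows. The following is a minimal sketch, assuming SQLite through Python's `sqlite3` module; the `departments` and `employees` tables and the helper `try_insert` are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`, which other systems do not require.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,                               -- entity integrity
        dept_id INTEGER NOT NULL REFERENCES departments(id),  -- referential integrity
        salary REAL NOT NULL CHECK (salary >= 0)              -- domain integrity
    );
    INSERT INTO departments VALUES (1, 'Finance');
""")

def try_insert(params):
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO employees VALUES (?, ?, ?)", params)
        return True
    except sqlite3.IntegrityError:
        return False

ok = try_insert((1, 1, 5000.0))         # valid row
dup_key = try_insert((1, 1, 4000.0))    # duplicate primary key
bad_fk = try_insert((2, 99, 4000.0))    # department 99 does not exist
bad_check = try_insert((3, 1, -10.0))   # negative salary
print(ok, dup_key, bad_fk, bad_check)   # True False False False
```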

Best Practices for Maintaining Data Integrity

To uphold data integrity, consider implementing the following practices:

- Input Validation – Ensure that data is validated before being entered into the system to avoid errors.

- Consistent Backups – Regularly back up data to protect against corruption or loss due to system failure.

- Enforcing Constraints – Use constraints such as unique keys, foreign keys, and check constraints to prevent invalid data from entering the system.

- Data Auditing – Implement audit trails to track changes and detect any unauthorized modifications.

Backup and Recovery Best Practices

Ensuring the safety and availability of data is vital for any organization. Implementing effective strategies for backing up and recovering information is crucial to prevent data loss and minimize downtime in case of unexpected failures. This section outlines the key practices for establishing a robust backup and recovery plan to maintain business continuity and data integrity.

Best Practices for Backup

Regular backups are essential to protect against data loss. Follow these best practices to ensure reliable backup procedures:

- Automate Backup Schedules – Set up automated backups to reduce the risk of human error and ensure that data is consistently saved at regular intervals.

- Backup Redundancy – Maintain multiple copies of backups in different locations, such as on-site and off-site or in cloud storage, to protect against physical damage or theft.

- Use Incremental Backups – Instead of backing up all data every time, perform incremental backups that only capture changes since the last backup, saving time and storage space.

- Monitor Backup Success – Regularly monitor and test backup jobs to ensure they are running correctly and the data is retrievable.

Best Practices for Recovery

When data loss occurs, a well-defined recovery strategy ensures minimal disruption. Here are essential recovery practices:

- Test Recovery Procedures – Regularly test the recovery process to ensure that data can be restored quickly and accurately when needed.

- Establish Recovery Time Objectives (RTO) – Define clear goals for how quickly data needs to be restored to meet business requirements and minimize downtime.

- Document Recovery Steps – Maintain detailed documentation of the recovery process, including the steps to restore data and troubleshoot common issues.

- Keep Backup Versions – Store different versions of backups, enabling recovery from various points in time in case of corruption or malicious activity.
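A backup is only useful if a restore from it has actually been tested. The sketch below walks through that cycle on a toy scale, assuming SQLite and the `Connection.backup` method from Python's `sqlite3` module (available since Python 3.7). The `accounts` table is hypothetical, and in-memory databases stand in for what would normally be files on separate storage.

```python
import sqlite3

# Primary database with some committed data.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
source.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100.0), (2, 250.0)])
source.commit()

# Take the backup; in practice the target would be a file kept off-site.
backup = sqlite3.connect(":memory:")
source.backup(backup)

# Simulate data loss on the primary.
source.execute("DELETE FROM accounts")
source.commit()

# Recovery test: copy the backup over the primary and check the contents.
backup.backup(source)
restored = source.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
print(restored)  # [(1, 100.0), (2, 250.0)]
```

Production systems use their own tooling for this (for example, dump utilities or log-based point-in-time recovery), but the cycle of back up, restore, and verify is the same.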

SQL Query Optimization Tips

Writing efficient queries is essential for improving the performance of any data retrieval process. Poorly written queries can lead to slow response times, increased load on the server, and inefficient resource usage. This section provides practical tips for optimizing queries, ensuring that data is retrieved quickly and with minimal system impact.

Use Indexes Wisely

Indexes play a crucial role in speeding up query execution by allowing the system to locate data faster. However, improper use can slow things down.

- Create indexes on frequently queried columns – Columns that are frequently used in WHERE clauses or as join keys should have indexes to improve search performance.

- Avoid over-indexing – Too many indexes can degrade performance on insert, update, and delete operations. Choose wisely.

- Consider composite indexes – When multiple columns are often queried together, composite indexes can help improve query speed.
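One way to check whether an index is actually used is to ask the database for its query plan before and after creating it. This is a sketch that assumes SQLite, whose `EXPLAIN QUERY PLAN` statement reports a full table scan versus an index search; the `orders` table and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable plan steps SQLite reports for a query."""
    # The description text is the fourth column of each EXPLAIN QUERY PLAN row.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

query = "SELECT id, total FROM orders WHERE customer_id = 42"

before = plan(query)  # expected to describe a scan of the whole table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # expected to describe a search using the new index

print(before)
print(after)
```

Other systems expose the same idea through their own commands, such as `EXPLAIN` in MySQL and PostgreSQL.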

Optimize SELECT Statements

Efficient SELECT statements help in reducing unnecessary data retrieval and improving query performance.

- Select only required columns – Avoid using SELECT * as it retrieves all columns, even those not needed. Instead, specify only the columns you require.

- Limit the result set – Use LIMIT (MySQL, PostgreSQL, SQLite) or TOP (SQL Server) to retrieve only the necessary number of rows, especially when dealing with large datasets.

- Use JOINs effectively – Optimize JOIN clauses by ensuring the proper use of INNER JOIN, LEFT JOIN, etc., and ensure the joined columns are indexed.

Minimize Subqueries and Nested SELECTs

Subqueries and nested SELECTs can often be replaced with more efficient alternatives. They can be slow and hard to maintain if overused.

- Replace subqueries with JOINs – When possible, replace subqueries with JOINs to make the query more efficient.

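- Use EXISTS instead of IN – When checking for existence in a subquery, EXISTS can be more efficient than IN on some systems because it can stop at the first matching row. Many modern optimizers produce the same plan for both, so compare the execution plans rather than assuming one always wins.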
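The three ways of asking "which customers have at least one order" can be compared side by side. This is a sketch that assumes SQLite through Python's `sqlite3` module, with hypothetical `customers` and `orders` tables. It only demonstrates that the forms return the same rows; which one runs fastest depends on the database and its optimizer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Chloe');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 3);
""")

# Subquery with IN.
with_in = conn.execute(
    "SELECT name FROM customers "
    "WHERE id IN (SELECT customer_id FROM orders) ORDER BY name"
).fetchall()

# Correlated subquery with EXISTS.
with_exists = conn.execute(
    "SELECT name FROM customers c "
    "WHERE EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.id) ORDER BY name"
).fetchall()

# JOIN; DISTINCT is needed because Ana has two orders and would appear twice.
with_join = conn.execute(
    "SELECT DISTINCT c.name FROM customers c "
    "JOIN orders o ON o.customer_id = c.id ORDER BY c.name"
).fetchall()

print(with_in == with_exists == with_join)  # True
print(with_in)  # [('Ana',), ('Chloe',)]
```

The DISTINCT in the JOIN version is the detail to watch: replacing a subquery with a JOIN can change the number of rows returned when the joined table has several matches per row.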

Filter Data Early

Filtering the data as early as possible in the query process helps reduce the amount of data being processed, which can significantly improve performance.

- Apply WHERE clauses early – Filter out unnecessary rows before any expensive operations like sorting or aggregation.

- Use efficient operators – Operators like BETWEEN or IN are more concise than long chains of OR conditions and are often easier for the optimizer to match to an index, so prefer them where they express the same condition.

Database Transactions and Their Impact

Transactions play a vital role in ensuring data integrity and consistency, particularly in systems where multiple operations are carried out simultaneously. Understanding how transactions work, as well as their effects on system performance and reliability, is crucial for maintaining efficient operations. This section explores the core aspects of transactions and their significant impact on overall system behavior.

What is a Transaction?

A transaction is a sequence of operations performed as a single logical unit of work. It ensures that either all of the operations succeed or none of them are applied. This is essential to maintain consistency and prevent partial updates that could lead to data anomalies.

| Transaction Property | Description |
|---|---|
| Atomicity | Ensures that a transaction is treated as a single, indivisible unit. If one part fails, the entire transaction fails. |
| Consistency | Guarantees that a transaction will bring the system from one valid state to another, maintaining predefined rules. |
| Isolation | Ensures that transactions do not interfere with each other. Even if multiple transactions are processed simultaneously, each transaction is executed in isolation. |
| Durability | Once a transaction is committed, its changes are permanent, even in the event of a system failure. |
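Atomicity is the easiest of these properties to observe directly. The sketch below assumes SQLite through Python's `sqlite3` module, where using the connection as a context manager commits on success and rolls back on an exception. The `accounts` table and the `transfer` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100.0), (2, 50.0)])
conn.commit()

def transfer(src, dst, amount):
    """Move money between accounts; both updates commit together or not at all."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

def balances():
    return conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()

ok = transfer(1, 2, 30.0)       # both updates succeed and are committed
failed = transfer(1, 2, 500.0)  # the debit violates the CHECK, so the credit is undone too
print(ok, failed, balances())   # True False [(1, 70.0), (2, 80.0)]
```

In the failed transfer, the credit to account 2 had already been applied when the debit was rejected; the rollback removes it, so no partial update is left behind.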

Impact on System Performance

While transactions are essential for maintaining integrity, they can also affect system performance, especially when dealing with large volumes of data. Transaction handling often involves locking mechanisms, which can lead to delays or reduced throughput if not properly managed. Additionally, ensuring durability requires writing changes to a transaction log on persistent storage before a commit completes, which adds storage load and processing time.

- Increased Resource Consumption – Transactions that involve large amounts of data may require significant processing power and memory, especially when rollback operations are needed.

- Lock Contention – In multi-user environments, transactions may require locking data resources, which can lead to contention and delays in processing other tasks.

- Commit Overhead – Committing a transaction ensures durability but may introduce overhead in terms of I/O operations, especially for systems with high transaction volume.

Relational vs Non-relational Databases

The structure and approach to data storage can vary significantly between different systems. While some rely on a well-defined structure with tables and fixed schemas, others allow for more flexibility with unstructured or semi-structured data. Understanding the differences between these two models is crucial for selecting the right solution for specific use cases.

Relational Model Overview

The relational model organizes data into structured tables with predefined columns and data types. Each table typically represents an entity, and relationships between entities are established through keys. This approach ensures that data is consistent and easily queried using a standardized language. It is well-suited for applications where the integrity of data and complex queries are essential.

- Structured Data – Data is stored in tables, each consisting of rows and columns with defined data types.

- ACID Properties – Systems maintain consistency by following ACID (Atomicity, Consistency, Isolation, Durability) principles to ensure reliable transactions.

- Complex Queries – Supports powerful querying capabilities using SQL, allowing for complex joins and aggregations.

Non-relational Model Overview

In contrast, non-relational systems are designed for handling large volumes of unstructured or semi-structured data. They offer flexibility in terms of the data format, often using key-value stores, document-based storage, or graph databases. These systems prioritize scalability and performance over rigid schema enforcement, making them suitable for modern, large-scale applications.

- Flexible Schema – No predefined structure, allowing data to be stored in various formats such as JSON or XML.

- Scalability – Non-relational systems are designed for horizontal scaling, making them ideal for big data applications.

- Eventual Consistency – Many non-relational systems prioritize availability and partition tolerance over consistency, meaning data may not always be immediately consistent across nodes.

Data Modeling and Entity Relationships

Creating a blueprint for how information is structured and related within a system is essential for ensuring that data is both organized and accessible. A proper design outlines how various elements interact, ensuring consistency, accuracy, and efficiency in data retrieval. Understanding the relationships between entities is a key aspect of this design, helping to visualize connections and data flow.

Entity-Relationship Diagrams

Entity-Relationship (ER) diagrams are a common tool used to visually represent the relationships between different data elements in a system. They help in mapping out entities, their attributes, and how they are interconnected. By defining entities and the relationships between them, ER diagrams serve as a critical step in the design process, ensuring a clear understanding of data structure.

- Entities – Objects or concepts that have a distinct existence, such as “Customer” or “Product”. Each entity can have various attributes that describe its characteristics.

- Relationships – Connections between two or more entities, such as “Customer places Order” or “Product belongs to Category”. These relationships help to establish meaningful interactions between data elements.

- Cardinality – Defines the number of instances of one entity that can be associated with another, such as one-to-one, one-to-many, or many-to-many relationships.

Types of Relationships

Understanding the different types of relationships is vital for creating an effective model. These relationships define how data is interconnected and influence the design of the system, from how it is stored to how it can be queried.

- One-to-One – A relationship where one instance of an entity is associated with only one instance of another entity, such as a “Person” and their “Passport”.

- One-to-Many – A relationship where one instance of an entity can be associated with multiple instances of another, like a “Teacher” and many “Students”.

- Many-to-Many – A relationship where multiple instances of one entity can be associated with multiple instances of another, for example, “Students” and “Courses”.
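In a relational schema, a many-to-many relationship is usually implemented with a third table, often called a junction table, that holds one row per pairing. The following is a sketch, assuming SQLite through Python's `sqlite3` module; the table names, sample rows, and the two helper functions are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE courses (id INTEGER PRIMARY KEY, title TEXT);
    -- Junction table: one row per (student, course) pair.
    CREATE TABLE enrollments (
        student_id INTEGER REFERENCES students(id),
        course_id INTEGER REFERENCES courses(id),
        PRIMARY KEY (student_id, course_id)
    );
    INSERT INTO students VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO courses VALUES (1, 'Databases'), (2, 'Networks');
    INSERT INTO enrollments VALUES (1, 1), (1, 2), (2, 1);
""")

def courses_for(student_name):
    """Titles of all courses a student is enrolled in."""
    rows = conn.execute(
        "SELECT c.title FROM courses c "
        "JOIN enrollments e ON e.course_id = c.id "
        "JOIN students s ON s.id = e.student_id "
        "WHERE s.name = ? ORDER BY c.title",
        (student_name,),
    ).fetchall()
    return [title for (title,) in rows]

def students_in(course_title):
    """Names of all students enrolled in a course."""
    rows = conn.execute(
        "SELECT s.name FROM students s "
        "JOIN enrollments e ON e.student_id = s.id "
        "JOIN courses c ON c.id = e.course_id "
        "WHERE c.title = ? ORDER BY s.name",
        (course_title,),
    ).fetchall()
    return [name for (name,) in rows]

print(courses_for("Ana"))        # ['Databases', 'Networks']
print(students_in("Databases"))  # ['Ana', 'Ben']
```

A one-to-many relationship needs no junction table: a foreign key column on the "many" side is enough.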

Understanding Indexing and Search Performance

Efficient data retrieval is crucial in any system that stores large amounts of information. One of the key techniques for optimizing performance is the use of indexing, which allows for faster access to data. By organizing data in a way that supports quick searches, indexing can dramatically reduce the time it takes to find specific information.

Without proper indexing, searches may involve scanning entire datasets, leading to significant delays, especially as the volume of data grows. In contrast, with an effective index structure, data can be accessed in a fraction of the time, improving overall performance and user experience.

Types of Indexes

There are various types of indexing strategies that can be employed, each designed to improve search speed in different scenarios. Choosing the right index depends on the type of data and the nature of the queries being executed.

- B-tree Index – The most commonly used index structure, ideal for range queries and ordered data retrieval. It works by storing data in a balanced tree format, allowing for efficient searches.

- Hash Index – Used for exact match queries, hash indexes use a hash function to map each key to a bucket, providing very fast lookups for equality searches. Because hashing does not preserve order, they do not help with range queries.

- Bitmap Index – Best suited for columns with a limited number of distinct values, such as “gender” or “status”. Bitmap indexes use a bit array to represent data, making them efficient for certain types of queries.
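The difference between ordered and hash-based indexes can be imitated with ordinary Python data structures. This is a conceptual sketch only: a dictionary stands in for a hash index and a sorted list with binary search stands in for the sorted keys of a B-tree. Real database indexes are disk-based and considerably more involved, and the sample rows are made up.

```python
from bisect import bisect_left, bisect_right

# Hypothetical rows: (row id, age).
rows = [(1, 34), (2, 19), (3, 52), (4, 27), (5, 41)]

# Hash-style index: maps each key straight to its row ids (exact match only).
hash_index = {}
for row_id, age in rows:
    hash_index.setdefault(age, []).append(row_id)

# Ordered index: keys kept in sorted order, as in the leaves of a B-tree.
ordered_index = sorted((age, row_id) for row_id, age in rows)
ordered_keys = [age for age, _ in ordered_index]

def ids_with_age(age):
    """Exact-match lookup through the hash-style index."""
    return hash_index.get(age, [])

def ids_in_age_range(low, high):
    """Row ids with low <= age <= high, found by binary search on the sorted keys."""
    start = bisect_left(ordered_keys, low)
    end = bisect_right(ordered_keys, high)
    return [row_id for _, row_id in ordered_index[start:end]]

print(ids_with_age(27))          # [4]
print(ids_in_age_range(25, 45))  # [4, 1, 5]
```

The range query works because neighbouring keys sit next to each other in the sorted structure; the dictionary offers no such ordering, which is why it only answers equality lookups.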

Impact on Search Performance

The use of indexing has a significant impact on search performance, but it also comes with trade-offs. While indexes can greatly speed up query execution, they can also consume additional resources and storage space. It’s important to strike a balance between query speed and system overhead when implementing indexing.

- Improved Query Speed – Proper indexing allows for faster data retrieval, reducing the time needed for search operations, particularly in large datasets.

- Resource Overhead – Indexes consume storage space and require processing power to maintain, especially when data is inserted, updated, or deleted.

- Optimizing for Specific Queries – Indexing strategies should be tailored to the types of queries most commonly executed, ensuring that the indexing structure aligns with the access patterns of the system.

Challenges in Database Scaling

As data grows and user demands increase, the need to scale systems becomes essential. However, expanding a system to handle larger volumes of information or more users introduces several complexities. The process of scaling is not just about adding more resources; it requires careful planning and understanding of underlying challenges that can affect performance, reliability, and cost.

One of the main hurdles in scaling involves maintaining consistent performance as the system grows. Adding more hardware or spreading the load across multiple servers can lead to diminishing returns if not done correctly. Additionally, scaling solutions must address data consistency, fault tolerance, and latency, all of which become more difficult as the size and complexity of the system increase.

Common Scaling Challenges

- Data Distribution – As systems scale, effectively distributing data across multiple locations or servers becomes more difficult. The challenge lies in ensuring that data is evenly spread without causing performance bottlenecks or data duplication issues.

- Consistency – Maintaining consistency across distributed systems is a critical challenge, especially when different parts of the system are being updated simultaneously. Ensuring that users always have access to the most up-to-date information requires sophisticated techniques such as distributed transactions and consensus protocols.

- Latency – As data moves across different servers or geographical regions, latency can increase, negatively affecting response times. Reducing this delay is a constant concern, especially for real-time applications.

- Fault Tolerance – Ensuring that the system remains operational in the event of hardware failure or network issues is vital. Achieving this requires redundancy and failover mechanisms, which can complicate scaling efforts.

Strategies for Addressing Scaling Issues

While scaling challenges are significant, there are several strategies that can help mitigate these issues and ensure that systems grow effectively without sacrificing performance or reliability.

- Sharding – Sharding involves splitting data into smaller, more manageable pieces that can be distributed across different servers. This allows for horizontal scaling, where additional servers are added to handle increasing workloads.

- Replication – Replicating data across multiple servers ensures that users can access data from the closest available server, reducing latency and improving redundancy in case of failure.

- Load Balancing – Implementing load balancers helps to distribute user requests evenly across servers, preventing any single server from becoming overwhelmed.
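The routing step behind sharding can be sketched in a few lines. The example assumes the simplest scheme, hashing the key and taking the remainder by the number of shards. The shard names and user ids are hypothetical, and production systems often prefer consistent hashing or range-based partitioning instead.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2"]  # hypothetical server names

def shard_for(key):
    """Pick a shard from a stable hash of the key.

    hashlib is used instead of the built-in hash(), which is salted per
    process for strings and would route the same key differently after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]

# Route 1000 hypothetical user ids and group them by shard.
placement = {}
for user_id in (f"user-{i}" for i in range(1000)):
    placement.setdefault(shard_for(user_id), []).append(user_id)

for shard in SHARDS:
    print(shard, len(placement.get(shard, [])))
```

With this scheme, changing the number of shards moves most keys to a different server, which is one reason the data distribution challenge described above is harder than it first appears.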

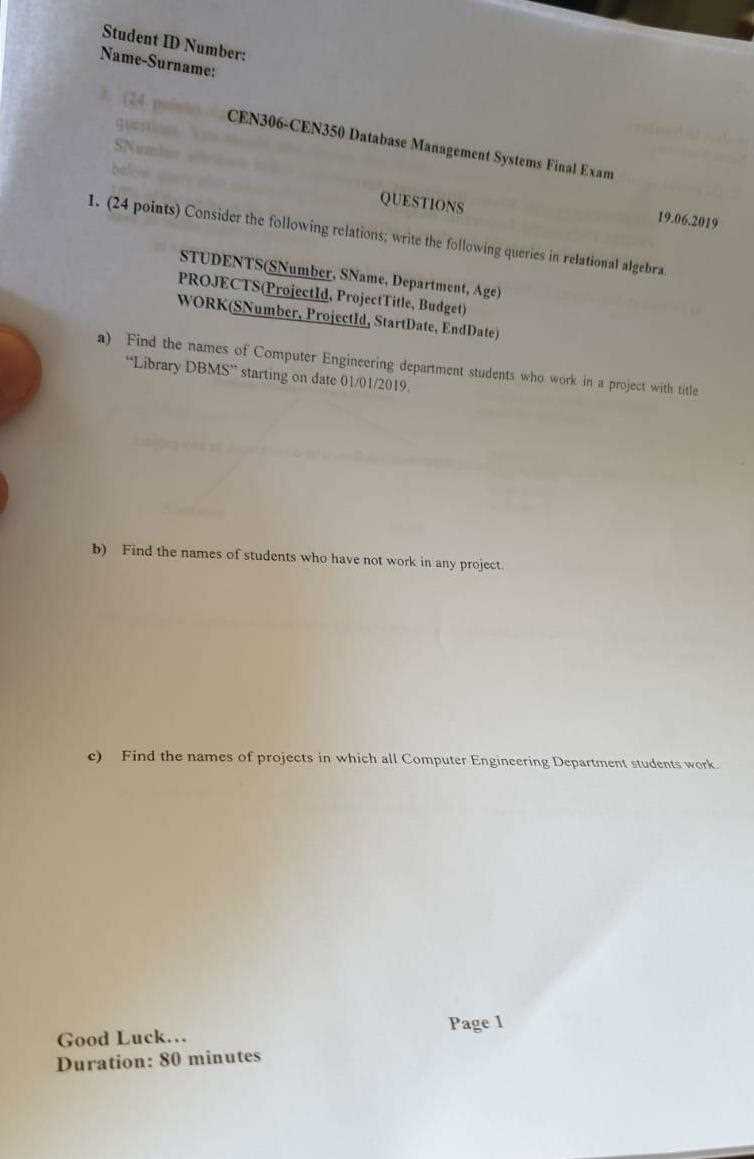

Preparing for Database Management Exams

Success in assessments related to information systems requires both a deep understanding of key concepts and the ability to apply them in practical scenarios. The preparation process involves a comprehensive review of theoretical foundations and hands-on practice to ensure a thorough grasp of the subject matter. Knowing how to organize your study plan, which areas to focus on, and how to tackle complex topics efficiently is critical for optimal results.

In order to excel, it is important to break down the preparation into manageable steps. This approach involves creating a study schedule, identifying the most important areas of focus, and engaging with a variety of study materials such as textbooks, online resources, and practice exercises. Building familiarity with common techniques and procedures is essential for boosting confidence and ensuring success on test day.

Effective Preparation Strategies

- Understand Core Concepts – Focus on grasping the underlying principles that drive key systems and processes. These fundamentals will provide a solid foundation for answering both basic and complex scenarios.

- Practice with Sample Tasks – Regularly engage with sample tasks or case studies to develop problem-solving skills. This practice will improve your ability to quickly analyze and address various challenges.

- Review Past Topics – Go over previously covered material to reinforce your memory. This will help you identify patterns and connections between different subjects and improve your ability to recall information under pressure.

Utilizing Study Materials

In addition to theoretical study, practical engagement with exercises and tools is vital. The more hands-on experience you have with tools and real-world scenarios, the better prepared you will be for any challenges. Here are a few resources to help guide your revision:

- Textbooks – Comprehensive guides will help you solidify your understanding of core principles.

- Online Courses – Interactive learning platforms provide video lectures and quizzes to test your knowledge in real time.

- Study Groups – Discussing topics with peers can lead to better understanding and uncover different approaches to solving problems.